Recent advances in AI, particularly large language models (LLMs), are transforming how software processes and reasons about data. Modern applications increasingly combine deterministic computation with LLM reasoning to analyze trends, detect anomalies, and generate insights at scale. Semantic Kernel orchestrates these AI components, integrating models, prompts, and functions into structured pipelines that work seamlessly with traditional code. For instance, in sales analytics, it enables automated data analysis, AI-powered forecasting, narrative trend interpretation, and visualization, all within a single coordinated system.

This issue explores the core concepts of Semantic Kernel and examines key parts of the code used in the sales analytics application, highlighting how deterministic computation and AI reasoning work together.

What Semantic Kernel Is

Semantic Kernel (SK) is an open source AI orchestration framework from Microsoft that integrates LLM reasoning into application code. This application uses its C# SDK; Python and Java SDKs are also available. It treats prompts, functions, and workflows as first-class building blocks, allowing deterministic code and LLM reasoning to run together in a single orchestrated pipeline. The Kernel serves as the execution engine, and any model exposed through a chat completion connector (or a custom IChatCompletionService implementation) can be plugged in. SK also supports plugins, planners, memory stores, and other extensions, providing a flexible and predictable way to combine traditional code with AI reasoning.

Why Semantic Kernel Matters for Real Applications

Real applications involve full workflows: loading data, running computations, detecting patterns, generating visuals, explaining results, and triggering next steps. Semantic Kernel is designed for these orchestrated sequences.

It eliminates duplicated prompt-handling code and centralizes model configuration. Models can be swapped by changing the service registration rather than the calling code. Steps can be composed or chained, deterministic logic stays deterministic, and every AI call goes through the same consistent interface. The result is an execution flow that is easier to maintain and debug, which matters for enterprise-scale AI applications.

Deep Technical Dive

Now that we understand what Semantic Kernel provides conceptually, let's take a closer look at how it works in practice. This section covers Kernel setup, namespaces, services, abstractions, and the execution flow behind the sales analytics application.

1. Kernel Construction

At the center of every Semantic Kernel-based system is the Kernel itself. It orchestrates AI services, function libraries, plugins, planners, and optional memory providers. The application's helper class follows the typical pattern of creating a single shared Kernel instance for the entire application.

Sharing one instance ensures that all modules use the same configuration, model provider, and dependency injection container. The Kernel plays a role similar to a singleton service in ASP.NET Core, but one tailored to AI-driven workflows.

Several namespaces participate in this setup, including:

- Microsoft.SemanticKernel, the core orchestration layer

- Microsoft.SemanticKernel.ChatCompletion, which defines LLM chat abstractions

- Microsoft.Extensions.DependencyInjection, which manages service resolution

Within these namespaces you'll find key types such as Kernel, IKernelBuilder (returned by Kernel.CreateBuilder()), IChatCompletionService, and ChatHistory. Together they define how models are registered, how prompts are constructed, and how results flow back through your application.

The lifecycle is modular: create a builder, register model providers and optional plugins, build the Kernel, and retrieve services as needed. Once constructed, the Kernel serves as the execution engine for all LLM reasoning.
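The lifecycle above can be sketched as a lazily built singleton. This is a minimal illustration, not the application's actual helper. The class name KernelProvider, the OPENAI_API_KEY environment variable, and the gpt-4o-mini model id are assumptions. It also requires the Microsoft.SemanticKernel NuGet package, which bundles the OpenAI connector.

```csharp
using System;
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.ChatCompletion;

// Hypothetical helper: one lazily built Kernel shared by every module.
// Assumes the Microsoft.SemanticKernel package and an OPENAI_API_KEY environment variable.
public static class KernelProvider
{
    // Lazy<T> is thread-safe by default, so BuildKernel runs at most once.
    private static readonly Lazy<Kernel> LazyKernel = new Lazy<Kernel>(BuildKernel);

    public static Kernel Instance => LazyKernel.Value;

    // Resolves the chat completion abstraction registered in the Kernel's service container.
    public static IChatCompletionService Chat =>
        Instance.GetRequiredService<IChatCompletionService>();

    private static Kernel BuildKernel()
    {
        string apiKey = Environment.GetEnvironmentVariable("OPENAI_API_KEY")
            ?? throw new InvalidOperationException("OPENAI_API_KEY is not set.");

        // 1. Create the builder.
        IKernelBuilder builder = Kernel.CreateBuilder();

        // 2. Register a model provider (the model id is an assumption).
        builder.AddOpenAIChatCompletion(modelId: "gpt-4o-mini", apiKey: apiKey);

        // 3. Optional plugins would be registered here, e.g. builder.Plugins.AddFromType<T>().

        // 4. Build the Kernel; services are then retrieved from it as needed.
        return builder.Build();
    }
}
```

Switching providers would mean changing only the registration line, for example to an Azure OpenAI registration. Modules that ask the Kernel for IChatCompletionService stay unchanged.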

2. How Semantic Kernel Handles Chat Completion

Semantic Kernel provides a unified abstraction layer over LLM chat APIs. Instead of calling the provider directly, the application retrieves the SK-managed chat interface, IChatCompletionService, from the Kernel.

Sending a conversation through that interface triggers several internal steps. The Kernel resolves the registered chat service and creates an execution context. The service then converts your ChatHistory into a provider-specific format, maps system, user, assistant, and tool messages to the provider's schema, and issues the API call.

When the response returns, it is wrapped in a strongly typed ChatMessageContent object, so responses are handled the same way regardless of provider. Core types like ChatHistory, ChatMessageContent, AuthorRole, and IChatCompletionService shield your code from vendor-specific differences. Because ChatHistory keeps messages from every role in order, multi-turn conversations stay clean and structured.
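Here is a minimal sketch of that flow. It assumes a Kernel with a chat service already registered, and the ChatExample class and its method names are hypothetical. The code only builds a ChatHistory and calls IChatCompletionService; SK handles the provider-specific translation.

```csharp
using System.Threading.Tasks;
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.ChatCompletion;

// Hypothetical helper showing the provider-neutral chat flow.
public static class ChatExample
{
    // Builds a conversation; SK converts it to the provider's schema when sent.
    public static ChatHistory BuildHistory(string systemPrompt, string userPrompt)
    {
        var history = new ChatHistory();
        history.AddSystemMessage(systemPrompt);
        history.AddUserMessage(userPrompt);
        return history;
    }

    // Sends the history through whichever IChatCompletionService the Kernel has registered.
    public static async Task<string> AskAsync(Kernel kernel, string systemPrompt, string userPrompt)
    {
        IChatCompletionService chat = kernel.GetRequiredService<IChatCompletionService>();
        ChatMessageContent reply = await chat.GetChatMessageContentAsync(
            BuildHistory(systemPrompt, userPrompt), kernel: kernel);
        return reply.Content ?? string.Empty;
    }
}
```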

The result is an architecture where deterministic logic and AI reasoning work together predictably, without scattering provider-specific code throughout your application.

How the Sales Analytics Application Uses SK

The application is built around four key modules:

- SalesDataLoader

- SalesTrendAnalyzer

- SalesChartGenerator

- SalesAnomalyDetector

Module 1: SalesDataLoader

This module is fully deterministic and loads raw data into a structured format. It reads a CSV file, parses each row, and constructs a list of SalesRecord objects.

The loader retrieves the CSV path, checks that the file exists, and then loads the sales data into memory.
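A minimal sketch of such a loader follows. It assumes a two-column CSV with a header row (Month,Amount) and a simplified SalesRecord; the real file layout and record type may differ. Parsing is separated from file access so it can be tested without touching disk.

```csharp
using System.Collections.Generic;
using System.Globalization;
using System.IO;
using System.Linq;

// Hypothetical record shape; the application's SalesRecord may carry more fields.
public record SalesRecord(string Month, decimal Amount);

public static class SalesDataLoader
{
    // Loads "Month,Amount" rows from a CSV file, failing fast if the file is missing.
    public static List<SalesRecord> Load(string csvPath)
    {
        if (!File.Exists(csvPath))
            throw new FileNotFoundException("Sales CSV not found.", csvPath);

        return Parse(File.ReadLines(csvPath));
    }

    // Parses CSV lines (the first line is a header) into SalesRecord objects.
    public static List<SalesRecord> Parse(IEnumerable<string> lines)
    {
        var records = new List<SalesRecord>();
        foreach (string line in lines.Skip(1))
        {
            if (string.IsNullOrWhiteSpace(line)) continue;

            string[] parts = line.Split(',');
            if (parts.Length < 2) continue; // skip malformed rows

            // InvariantCulture makes "1200.50" parse identically on every machine.
            if (decimal.TryParse(parts[1].Trim(), NumberStyles.Number,
                                 CultureInfo.InvariantCulture, out decimal amount))
            {
                records.Add(new SalesRecord(parts[0].Trim(), amount));
            }
        }
        return records;
    }
}
```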

There is no AI involvement at this stage. This module provides a reliable foundation for SK-powered components downstream.

Module 2: SalesTrendAnalyzer

Here, deterministic math meets LLM reasoning. The module first computes totals, averages, and extremes.
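The deterministic part can be sketched as follows. The SalesRecord and SalesSummary shapes and the SalesTrendStats name are hypothetical, and MaxBy/MinBy require .NET 6 or later.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical record shapes for this sketch.
public record SalesRecord(string Month, decimal Amount);
public record SalesSummary(decimal Total, decimal Average, SalesRecord Best, SalesRecord Worst);

public static class SalesTrendStats
{
    // Deterministic statistics computed before the LLM is involved.
    public static SalesSummary Summarize(IReadOnlyList<SalesRecord> records)
    {
        if (records.Count == 0)
            throw new ArgumentException("At least one record is required.", nameof(records));

        decimal total = records.Sum(r => r.Amount);
        decimal average = total / records.Count;
        SalesRecord best = records.MaxBy(r => r.Amount)!;   // first record with the highest amount
        SalesRecord worst = records.MinBy(r => r.Amount)!;  // first record with the lowest amount
        return new SalesSummary(total, average, best, worst);
    }
}
```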

The Kernel then orchestrates the narrative interpretation of these figures.

Two execution settings shape that narrative call:

- Temperature controls the creativity and variability of the model's responses. A lower value (0.3) keeps the output focused and consistent, which suits business reporting, although it does not make the output fully deterministic.

- MaxTokens caps the response length. A limit of 1000 tokens leaves room for a detailed analysis while bounding length and cost; a reply that would need more tokens is cut off.
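This sketch shows how these settings might be attached to the narrative call, assuming the OpenAI connector's OpenAIPromptExecutionSettings type. The TrendNarrator class and the prompt wording are hypothetical. The model receives numbers that were already computed in code and is asked only to interpret them.

```csharp
using System.Threading.Tasks;
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.ChatCompletion;
using Microsoft.SemanticKernel.Connectors.OpenAI;

// Hypothetical narrator: deterministic figures in, narrative text out.
public static class TrendNarrator
{
    // Low temperature for focused business prose; the reply is capped at 1000 tokens.
    public static OpenAIPromptExecutionSettings CreateSettings() => new()
    {
        Temperature = 0.3,
        MaxTokens = 1000
    };

    public static async Task<string> NarrateAsync(
        Kernel kernel, decimal total, decimal average, string bestMonth, string worstMonth)
    {
        var history = new ChatHistory();
        history.AddSystemMessage("You are a business analyst. Interpret the figures; do not recalculate them.");
        history.AddUserMessage(
            $"Total sales: {total}. Monthly average: {average}. " +
            $"Best month: {bestMonth}. Worst month: {worstMonth}. " +
            "Describe the overall trend and its likely drivers.");

        IChatCompletionService chat = kernel.GetRequiredService<IChatCompletionService>();
        ChatMessageContent reply = await chat.GetChatMessageContentAsync(history, CreateSettings(), kernel);
        return reply.Content ?? string.Empty;
    }
}
```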

This approach combines precise calculations with human-like interpretation, coordinated cleanly by the Kernel.

Module 3: SalesChartGenerator

This module uses AI for forecasting and deterministic code for visualization. The prompt asks the LLM to return the predicted values for the next three months.
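The following hypothetical prompt builder illustrates the idea. It states the history explicitly and pins the output format so code can parse the reply. The wording and the ForecastPrompt name are assumptions, not the application's actual prompt.

```csharp
using System.Collections.Generic;
using System.Globalization;
using System.Linq;

// Hypothetical prompt builder for the forecasting step.
public static class ForecastPrompt
{
    public static string Build(IEnumerable<decimal> history, int months = 3)
    {
        // Invariant formatting keeps the numbers in the prompt culture-independent.
        string series = string.Join(", ",
            history.Select(v => v.ToString(CultureInfo.InvariantCulture)));

        // Pinning the output format makes the reply machine-parseable.
        return $"Monthly sales so far: {series}. " +
               $"Predict the next {months} monthly values. " +
               "Respond with only the numbers as a comma-separated list, " +
               "with no currency symbols, units, or extra text.";
    }
}
```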

The model returns a comma-separated list, which is parsed into decimal values.
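A tolerant parser for such a reply might look like the sketch below; ForecastParser is a hypothetical name. It uses the invariant culture so a decimal point always parses the same way, and it skips tokens that are not numbers instead of throwing.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;

// Hypothetical parser for a reply such as "1520.5, 1610, 1700".
public static class ForecastParser
{
    public static List<decimal> Parse(string reply)
    {
        var values = new List<decimal>();
        foreach (string token in reply.Split(',',
                     StringSplitOptions.RemoveEmptyEntries | StringSplitOptions.TrimEntries))
        {
            // Non-numeric tokens are skipped rather than crashing the pipeline.
            if (decimal.TryParse(token, NumberStyles.Number, CultureInfo.InvariantCulture, out decimal value))
                values.Add(value);
        }
        return values;
    }
}
```

The model can still return the wrong number of values, so a caller may want to check the count before charting.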

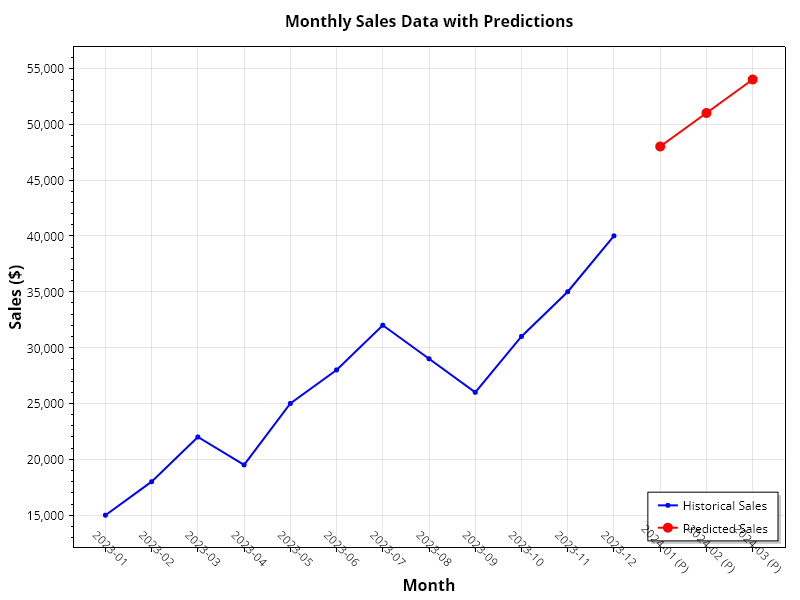

Once the future values are available, the chart is produced using ScottPlot.

The model supplies the forecast through the Kernel, while all computation and rendering remain deterministic. The resulting chart visualizes both the historical sales data and the AI-predicted values for the next three months.
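A minimal charting sketch follows. It assumes the ScottPlot 5 API (Plot, Add.Scatter, Title, SavePng) and values already converted from decimal to double; the SalesChart class is hypothetical. The forecast series starts at the last actual point so the two lines join visually.

```csharp
using System;
using System.Linq;
using ScottPlot;

// Hypothetical chart helper; assumes the ScottPlot 5 NuGet package.
public static class SalesChart
{
    public static void Save(double[] actual, double[] forecast, string path)
    {
        if (actual.Length == 0)
            throw new ArgumentException("At least one actual value is required.", nameof(actual));

        // Months 1..n for the historical series.
        double[] actualX = Enumerable.Range(1, actual.Length).Select(i => (double)i).ToArray();

        // Forecast series: last actual point (x = n) followed by the predicted months.
        double[] forecastX = Enumerable.Range(actual.Length, forecast.Length + 1).Select(i => (double)i).ToArray();
        double[] forecastY = new[] { actual[^1] }.Concat(forecast).ToArray();

        var plot = new Plot();
        plot.Add.Scatter(actualX, actual);
        plot.Add.Scatter(forecastX, forecastY);
        plot.Title("Monthly Sales and AI Forecast");
        plot.XLabel("Month");
        plot.YLabel("Sales");
        plot.SavePng(path, 800, 450);
    }
}
```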

Module 4: SalesAnomalyDetector

This module combines statistical anomaly detection with AI explanations. It computes the mean and standard deviation of the sales values.

Values that deviate significantly from the mean are collected as anomalies.
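One common way to implement this is a z-score rule, sketched below. The two-standard-deviation threshold and the use of the population standard deviation are assumptions, and the application may use a different cutoff. SalesAnomalyStats is a hypothetical name.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical record shape for this sketch.
public record SalesRecord(string Month, decimal Amount);

public static class SalesAnomalyStats
{
    // Flags records more than `threshold` standard deviations from the mean (z-score rule).
    public static List<SalesRecord> FindAnomalies(IReadOnlyList<SalesRecord> records, double threshold = 2.0)
    {
        if (records.Count == 0) return new List<SalesRecord>();

        double[] values = records.Select(r => (double)r.Amount).ToArray();
        double mean = values.Average();

        // Population standard deviation: square root of the mean squared deviation.
        double stdDev = Math.Sqrt(values.Average(v => (v - mean) * (v - mean)));
        if (stdDev == 0) return new List<SalesRecord>(); // all values equal: nothing deviates

        return records
            .Where(r => Math.Abs((double)r.Amount - mean) / stdDev > threshold)
            .ToList();
    }
}
```

With a population standard deviation, no value can lie more than the square root of (n - 1) deviations from the mean. As a result, a strict threshold of 2 cannot flag anything when there are five or fewer records.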

Once the anomalies are identified, the model provides a natural language explanation of what might be causing them.
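The following hypothetical prompt builder covers the explanation step. The statistics and the list of anomalies come from code, and the model is asked only for plausible causes, explicitly framed as hypotheses. The resulting string would be sent as a user message through IChatCompletionService. AnomalyPrompt and the prompt wording are assumptions.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

// Hypothetical record shape for this sketch.
public record SalesRecord(string Month, decimal Amount);

public static class AnomalyPrompt
{
    public static string Build(IEnumerable<SalesRecord> anomalies, double mean, double stdDev)
    {
        var sb = new StringBuilder();
        // Invariant formatting keeps numbers consistent regardless of machine culture.
        sb.AppendLine(FormattableString.Invariant($"Mean monthly sales: {mean:F2}; standard deviation: {stdDev:F2}."));
        sb.AppendLine("The following months deviate significantly from the mean:");
        foreach (SalesRecord r in anomalies)
            sb.AppendLine(FormattableString.Invariant($"- {r.Month}: {r.Amount}"));
        sb.AppendLine("Suggest plausible business explanations for each, and label them as hypotheses.");
        return sb.ToString();
    }
}
```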

This approach pairs machine-level detection with human-like reasoning, combining the precision of numeric methods with the interpretability of an expert explanation.

Architectural Benefits

The structure delivers clear advantages. Each module is independent, deterministic calculations remain deterministic, and AI is used only where it adds value. All prompts and model configurations flow through a single Kernel, so a model can be replaced by changing its registration rather than the modules that use it. This keeps the system maintainable, testable, and predictable, with AI acting as a clean extension rather than logic scattered across the codebase.

Opportunities for Future Expansion

Modules could be converted into Kernel plugins for improved modularity. Vector memory could enable semantic search across historical records. Automated PDF reporting could combine charts and AI summaries. A natural language query layer would allow flexible questions, and SK planners or automatic function calling could enable more advanced analytic workflows.
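To illustrate the first idea, a deterministic calculation can be exposed as a Kernel plugin with the [KernelFunction] attribute and invoked through the Kernel. SalesStatsPlugin, its Average function, and PluginDemo are hypothetical. Native functions like this run without any AI service registered.

```csharp
using System;
using System.ComponentModel;
using System.Globalization;
using System.Linq;
using System.Threading.Tasks;
using Microsoft.SemanticKernel;

// Hypothetical plugin wrapping a deterministic calculation as a Kernel function.
public sealed class SalesStatsPlugin
{
    [KernelFunction, Description("Returns the average of a comma-separated list of sales amounts.")]
    public decimal Average([Description("Comma-separated amounts, e.g. 100,200,300")] string amounts)
    {
        decimal[] values = amounts
            .Split(',', StringSplitOptions.RemoveEmptyEntries | StringSplitOptions.TrimEntries)
            .Select(s => decimal.Parse(s, CultureInfo.InvariantCulture))
            .ToArray();
        return values.Length == 0 ? 0m : values.Average();
    }
}

public static class PluginDemo
{
    public static async Task<decimal> RunAverageAsync(string amounts)
    {
        // A Kernel with no AI service can still invoke native functions.
        Kernel kernel = Kernel.CreateBuilder().Build();
        kernel.ImportPluginFromType<SalesStatsPlugin>("SalesStats");

        FunctionResult result = await kernel.InvokeAsync(
            "SalesStats", "Average", new KernelArguments { ["amounts"] = amounts });
        return result.GetValue<decimal>();
    }
}
```

Registered this way, the same function also becomes visible to function calling, so a model could request it instead of doing arithmetic itself.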

Final Notes

The sales analytics application showed how Semantic Kernel orchestrated a hybrid AI pipeline that included data loading, computation, LLM interpretation, forecasting, charting, and anomaly explanation. SK managed orchestration and prompting, while deterministic modules handled computation and visualization. The architecture was modular, predictable, and easy to evolve, reflecting SK's strength in blending traditional computation with AI reasoning.

Explore Semantic Kernel this week and run the Sales Analytics source code locally.

See you in the next issue.

Stay curious.

Join the Newsletter

Subscribe for exclusive insights, strategies, and updates from Elliot One. No spam, just value.