Modern AI systems are increasingly defined by how they are built, orchestrated, and deployed in real-world environments. Topics such as AI-assisted coding, hybrid AI workflows, Retrieval-Augmented Generation (RAG), prompt engineering, and context engineering all reflect this shift toward system-level design.

This issue steps back to examine a more fundamental transition. The most significant difference between classical machine learning and modern AI systems is not model size, parameter count, or benchmark performance. It is a change in system architecture, and that change has reshaped how intelligence is designed, deployed, and evolved in software systems.

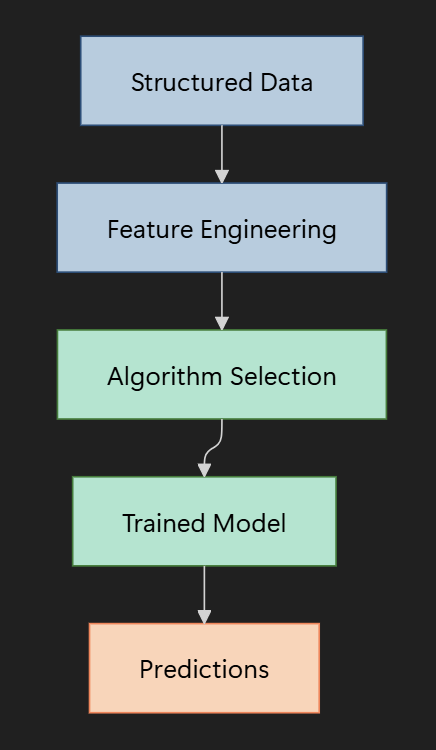

Classical Machine Learning Was Algorithm-Centric

Classical machine learning focused on selecting the correct algorithm for a clearly defined task.

The workflow was linear, stable, and well understood:

- Collect structured data

- Engineer features

- Choose an algorithm

- Train the model

- Deploy predictions

Most of the system intelligence lived directly inside the trained model weights.

Once deployed, the model was effectively static. Any meaningful change in behavior required retraining, validation, and redeployment.

This made classical ML powerful, efficient, and predictable, but also rigid.

Categories of Classical Machine Learning Algorithms

Classical machine learning algorithms are categorized by how they learn from data. Each category assumes a fixed problem definition and a specific data format.

Supervised Learning

Supervised learning uses labeled data, where the correct output is known during training.

It is primarily used for prediction and classification.

Common examples include:

- Linear Regression: Predicts continuous values such as prices or demand.

- Logistic Regression: Performs binary classification such as fraud detection or churn prediction.

- Support Vector Machines (SVM): Effective when classes are separated by a clear margin, including in high-dimensional feature spaces.

- Decision Trees: Rule-based models with high interpretability.

- Random Forests: Ensembles of decision trees that improve stability and generalization.

- Gradient Boosting Models: Ensembles that add trees sequentially, each one correcting the errors of the previous ones. XGBoost and LightGBM are widely used implementations.

This category dominates traditional business ML because outcomes are measurable and evaluation is straightforward.
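A minimal sketch of the supervised setting, assuming a single numeric feature: the `FitLine` helper and the toy data below are illustrative, not part of any library. Because every training example carries its correct output, the fitted line can be scored directly against the labels.

```csharp
using System;
using System.Linq;

// Labeled training data (illustrative): each input x has a known target, here y = 2x + 1.
double[] xs = { 1, 2, 3, 4, 5 };
double[] ys = { 3, 5, 7, 9, 11 };

var (slope, intercept) = FitLine(xs, ys);
Console.WriteLine($"y = {slope:F2}x + {intercept:F2}");

// Evaluation is direct: compare predictions with the known labels (mean squared error).
double mse = xs.Zip(ys, (xi, yi) => Math.Pow(slope * xi + intercept - yi, 2)).Average();
Console.WriteLine($"MSE = {mse:F4}");

// Ordinary least squares for one feature:
// slope = cov(x, y) / var(x), intercept = mean(y) - slope * mean(x).
static (double Slope, double Intercept) FitLine(double[] x, double[] y)
{
    double mx = x.Average(), my = y.Average();
    double cov = 0, varX = 0;
    for (int i = 0; i < x.Length; i++)
    {
        cov += (x[i] - mx) * (y[i] - my);
        varX += (x[i] - mx) * (x[i] - mx);
    }
    double m = cov / varX;
    return (m, my - m * mx);
}
```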

Unsupervised Learning

Unsupervised learning works with unlabeled data.

The goal is to discover structure or patterns without predefined targets.

Common examples include:

- K-Means Clustering: Groups data points by similarity.

- Hierarchical Clustering: Builds nested groupings of data.

- Principal Component Analysis (PCA): Reduces dimensionality while preserving variance.

- Autoencoders: Neural models used for compression and anomaly detection.

Unsupervised learning is often used for exploration, segmentation, and feature extraction.
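A minimal sketch of K-means (Lloyd's algorithm) on one-dimensional, unlabeled points. The `KMeans` helper, the naive "first k points" initialization, and the data are assumptions for illustration; a production system would use a library implementation with better initialization, such as k-means++.

```csharp
using System;
using System.Linq;

// Unlabeled 1-D points (illustrative): two visible groups, but no targets.
double[] points = { 1.0, 1.5, 2.0, 9.0, 9.5, 10.0 };

double[] centroids = KMeans(points, k: 2, iterations: 10);
Console.WriteLine(string.Join(", ", centroids));

// Lloyd's algorithm: assign each point to its nearest centroid, move each
// centroid to the mean of its assigned points, and repeat.
static double[] KMeans(double[] data, int k, int iterations)
{
    double[] c = data.Take(k).ToArray(); // naive initialization: the first k points
    for (int it = 0; it < iterations; it++)
    {
        // Assignment step: index of the closest centroid for every point.
        int[] assign = data
            .Select(p => Enumerable.Range(0, k).OrderBy(idx => Math.Abs(p - c[idx])).First())
            .ToArray();

        // Update step: recompute each centroid from its members.
        for (int j = 0; j < k; j++)
        {
            double[] members = data.Where((p, i) => assign[i] == j).ToArray();
            if (members.Length > 0) c[j] = members.Average(); // empty clusters keep their centroid
        }
    }
    return c;
}
```

With this data the two centroids settle at 1.5 and 9.5, the means of the two groups, after a couple of iterations.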

Semi-Supervised Learning

Semi-supervised learning combines small amounts of labeled data with large volumes of unlabeled data.

It is useful when labeling is expensive or slow.

Examples include:

- Label Propagation: Spreads labels across similarity graphs.

- Self-Training Models: Use confident predictions as pseudo-labels in iterative training.

This category sits between supervised and unsupervised learning.
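A minimal self-training sketch, assuming a nearest-centroid classifier and one-dimensional data. The confidence rule (the gap between a point's distances to the two class centroids) and the `minMargin` threshold are illustrative choices, not a standard.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// A few labeled points (value, class) and a larger unlabeled pool (illustrative data).
var labeled = new List<(double X, int Label)> { (1.0, 0), (9.0, 1) };
var unlabeled = new List<double> { 1.5, 2.0, 3.0, 5.2, 8.0, 8.5 };
const double minMargin = 2.0; // accept a pseudo-label only above this confidence

for (int round = 0; round < 5; round++)
{
    // Train: a nearest-centroid classifier with one mean per class.
    double c0 = labeled.Where(p => p.Label == 0).Average(p => p.X);
    double c1 = labeled.Where(p => p.Label == 1).Average(p => p.X);

    // Predict on the unlabeled pool; confidence is the gap between the two distances.
    var confident = unlabeled
        .Select(x => (X: x,
                      Label: Math.Abs(x - c0) <= Math.Abs(x - c1) ? 0 : 1,
                      Margin: Math.Abs(Math.Abs(x - c0) - Math.Abs(x - c1))))
        .Where(p => p.Margin >= minMargin)
        .ToList();
    if (confident.Count == 0) break; // nothing confident left to promote

    // Promote confident predictions to pseudo-labels; the next round retrains on them.
    foreach (var item in confident)
    {
        labeled.Add((item.X, item.Label));
        unlabeled.Remove(item.X);
    }
}

Console.WriteLine($"Labeled: {labeled.Count}; still unlabeled: {string.Join(", ", unlabeled)}");
```

The point 5.2 sits almost halfway between the two classes, so it never clears the confidence threshold and stays unlabeled, which is the intended safeguard against propagating uncertain labels.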

Reinforcement Learning

Reinforcement learning trains agents through interaction with an environment.

Instead of labels, learning is driven by rewards and penalties.

Common examples include:

- Q-Learning: Learns optimal actions by estimating the value of each action in each state.

- Deep Q Networks (DQN): Combine neural networks with Q-learning to handle large state spaces.

- Policy Gradient Methods: Directly optimize action policies.

While powerful, reinforcement learning is uncommon in traditional business systems due to complexity and operational cost.
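A minimal tabular Q-learning sketch on a hypothetical five-state corridor. To keep the run deterministic, it applies the Q-learning update to every state-action pair in a fixed sweep order instead of sampling actions with an exploration policy such as epsilon-greedy; the rewards, `alpha`, and `gamma` are illustrative.

```csharp
using System;
using System.Linq;

// Toy environment (illustrative): a corridor of states 0..4 with the goal at 4.
// Action 0 moves left, action 1 moves right; entering the goal pays reward 1.
const int nStates = 5, goal = 4;
const double alpha = 0.5, gamma = 0.9; // learning rate and discount factor
var q = new double[nStates, 2];

(int Next, double Reward) Step(int state, int action)
{
    int next = Math.Clamp(state + (action == 1 ? 1 : -1), 0, nStates - 1);
    return (next, next == goal ? 1.0 : 0.0);
}

// Q-learning update: Q(s,a) += alpha * (r + gamma * max_a' Q(s',a') - Q(s,a)).
for (int sweep = 0; sweep < 200; sweep++)
{
    for (int s = 0; s < goal; s++)
    {
        for (int a = 0; a < 2; a++)
        {
            var (next, reward) = Step(s, a);
            // The goal is terminal, so it contributes no future value.
            double future = next == goal ? 0.0 : Math.Max(q[next, 0], q[next, 1]);
            q[s, a] += alpha * (reward + gamma * future - q[s, a]);
        }
    }
}

// Greedy policy read off the learned Q-table.
string[] policy = Enumerable.Range(0, goal)
    .Select(st => q[st, 1] > q[st, 0] ? "right" : "left")
    .ToArray();
Console.WriteLine(string.Join(" ", policy));
```

After training, the greedy policy moves right in every state, and the learned value of moving right from state 0 is close to 0.9^3 = 0.729, because the goal reward arrives three discount steps later.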

Classical ML Architecture

Classical ML systems follow a linear pipeline.

Once deployed, the system does not adapt unless retrained. This architecture works best when the problem is stable, well bounded, and measurable.

A Classical ML Example in ML.NET

A simple binary classification pipeline using logistic regression:

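```csharp
using Microsoft.ML;
using Microsoft.ML.Data;

var ml = new MLContext();

var data = ml.Data.LoadFromTextFile<InputData>(
    path: "data.csv",
    hasHeader: true,
    separatorChar: ',');

// Combine the numeric inputs into the "Features" vector, then train logistic regression on "Label".
var pipeline = ml.Transforms
    .Concatenate("Features", nameof(InputData.Age), nameof(InputData.Income))
    .Append(ml.BinaryClassification.Trainers.LbfgsLogisticRegression());

var model = pipeline.Fit(data);

// Assumed CSV layout: Age, Income, Label (true/false).
public class InputData
{
    [LoadColumn(0)] public float Age { get; set; }
    [LoadColumn(1)] public float Income { get; set; }
    [LoadColumn(2)] public bool Label { get; set; }
}
```

This approach is fast, lightweight, and highly interpretable.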

For problems such as lead scoring, churn prediction, or basic forecasting, this remains the correct solution.
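The interpretability comes from the model's form: a weighted sum passed through a sigmoid. A minimal sketch with hypothetical weights (not values learned from `data.csv`), assuming income is expressed in thousands:

```csharp
using System;

// Hypothetical weights, as if learned by logistic regression on Age and Income (in thousands).
const double bias = -4.0, wAge = 0.05, wIncome = 0.02;

double Score(double age, double incomeK)
{
    // Linear log-odds: each weight shows how strongly its feature pushes the prediction.
    double logit = bias + wAge * age + wIncome * incomeK;
    // The sigmoid maps log-odds to a probability between 0 and 1.
    return 1.0 / (1.0 + Math.Exp(-logit));
}

double p = Score(age: 40, incomeK: 100);
Console.WriteLine($"P(positive) = {p:F3}");
```

Each weight can be read directly: in this sketch, ten extra years of age add 0.5 to the log-odds, regardless of income.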

The Limits of Classical Machine Learning

Classical ML begins to struggle when:

- Data is unstructured, such as text, images, or audio

- Requirements change frequently

- Tasks cannot be fully specified upfront

- Context matters across interactions

- Reasoning, abstraction, or synthesis is required

In these scenarios, feature engineering becomes the bottleneck. Accuracy is no longer the primary constraint. Adaptability is.

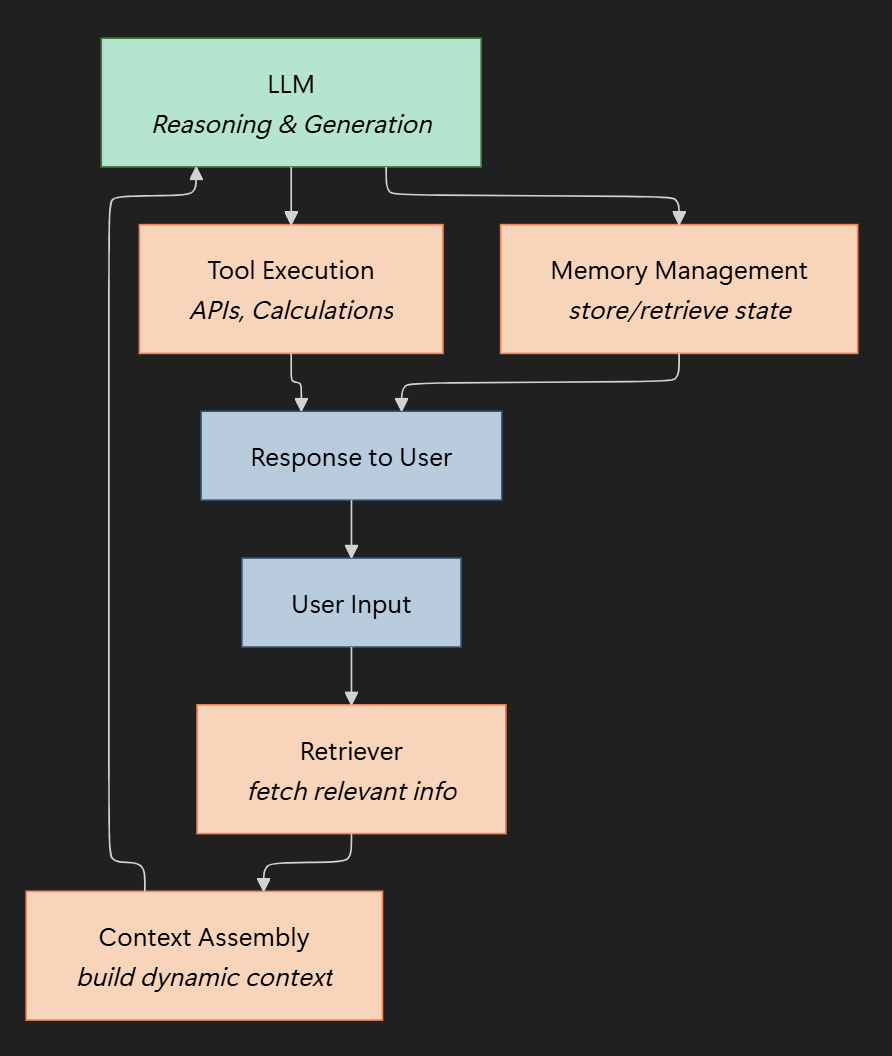

Modern AI Is System-Centric

Modern AI shifts the focus away from individual algorithms.

Intelligence emerges from how components are composed.

Typical system components include:

- Large Language Models

- Embedding models

- Vector databases

- Retrieval pipelines

- Tool execution and function calling

- Memory and context management

The model is no longer the system.

It is one component inside a broader architecture.

Modern AI Architecture

Modern AI systems are orchestration-driven.

System behavior changes by modifying context, tools, or data, not by retraining the model.
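A minimal sketch of that idea, assuming a hypothetical `FakeModel` function in place of a real LLM. The model never changes; the orchestrator decides which data and tool output reach it, so editing the `knowledge` dictionary or the `tools` registry changes the answers immediately.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for an LLM: a fixed function that is never retrained here.
// It can only answer from whatever context the orchestrator hands it.
static string FakeModel(string question, string context) =>
    context.Length == 0 ? "I don't know." : $"Based on: {context}";

// Data and tools belong to the orchestrator and can change at runtime.
var knowledge = new Dictionary<string, string>
{
    ["refund policy"] = "Refunds are accepted within 30 days."
};
var tools = new Dictionary<string, Func<string>>
{
    ["order status"] = () => "Order 42 has shipped." // hypothetical tool result
};

string Ask(string question)
{
    var context = new List<string>();
    // Gather matching knowledge and tool output, then invoke the same model.
    foreach (var (topic, fact) in knowledge)
        if (question.Contains(topic, StringComparison.OrdinalIgnoreCase)) context.Add(fact);
    foreach (var (name, tool) in tools)
        if (question.Contains(name, StringComparison.OrdinalIgnoreCase)) context.Add(tool());
    return FakeModel(question, string.Join(" ", context));
}

Console.WriteLine(Ask("What is the refund policy?"));

// Change behavior by editing data, not the model.
knowledge["refund policy"] = "Refunds are accepted within 60 days.";
Console.WriteLine(Ask("What is the refund policy?"));
Console.WriteLine(Ask("What is my order status?"));
```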

Classical ML vs Modern AI Systems

The difference is architectural, not merely mathematical.

| Aspect | Classical ML | Modern AI Systems |
|---|---|---|
| Training | Task-specific | General pretraining |
| Input | Structured features | Natural language |
| Adaptation | Retraining | Prompt and context |
| Reasoning | Not supported | Emergent |
| Deployment | Single endpoint | Orchestrated system |

This changes how software is built, deployed, and evolved.

Retrieval Augmented Generation Changes the Data Flow

Retrieval Augmented Generation replaces feature engineering with runtime retrieval.

Instead of encoding knowledge into model weights, knowledge is fetched dynamically.

The RAG flow:

- User query

- Embedding generation

- Vector search

- Context assembly

- LLM response

Knowledge becomes an architectural concern rather than a training concern.
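The five steps above can be sketched end to end without external services. This is a toy, assuming an in-memory list in place of a vector database and word-count vectors in place of a learned embedding model, so similarity here reflects shared words rather than meaning; the final LLM call is left out and the sketch stops at the assembled prompt.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Toy document store (illustrative); a real system would use a vector database.
var docs = new List<string>
{
    "Refunds are accepted within 30 days of purchase.",
    "Shipping takes 5 to 7 business days.",
    "Support is available by email around the clock."
};

static string[] Tokens(string text) =>
    text.ToLowerInvariant().Split(new[] { ' ', '.', ',', '?' }, StringSplitOptions.RemoveEmptyEntries);

// Toy embedding: word counts over a vocabulary. It stands in for a learned
// embedding model and captures word overlap only, not meaning.
static double[] Embed(string text, string[] vocab)
{
    var tokens = Tokens(text);
    return vocab.Select(w => (double)tokens.Count(t => t == w)).ToArray();
}

static double Cosine(double[] a, double[] b)
{
    double dot = a.Zip(b, (x, y) => x * y).Sum();
    double norm = Math.Sqrt(a.Sum(x => x * x)) * Math.Sqrt(b.Sum(x => x * x));
    return norm == 0 ? 0 : dot / norm;
}

// Query -> embedding -> similarity search -> context assembly -> prompt.
string BuildPrompt(string query, int k)
{
    string[] vocab = docs.SelectMany(doc => Tokens(doc)).Distinct().ToArray();
    double[] queryVec = Embed(query, vocab);
    var context = docs.OrderByDescending(d => Cosine(Embed(d, vocab), queryVec)).Take(k);
    return $"Answer using the context below.\n\nContext:\n{string.Join("\n", context)}\n\nUser:\n{query}";
}

Console.WriteLine(BuildPrompt("How many days do refunds take?", k: 1));

// Adding a document changes the next retrieval immediately; nothing is retrained.
docs.Add("Gift cards never expire.");
Console.WriteLine(BuildPrompt("Do gift cards expire?", k: 1));
```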

Minimal RAG Example in C#

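A simplified RAG flow in C#. The `embeddingGenerator`, `vectorStore`, and `chatService` objects are assumed to be already-configured services; exact method names and return types depend on the SDK in use:

```csharp
// 1. Embed the user query.
var embedding = await embeddingGenerator.GenerateAsync(query);

// 2. Retrieve the five most similar documents (assumed to come back as text).
var documents = await vectorStore.SearchAsync(
    embedding,
    limit: 5);

// 3. Assemble the retrieved context and the question into one prompt.
var prompt = $"""
    Answer the user question using the context below.

    Context:
    {string.Join("\n\n", documents)}

    User:
    {query}
    """;

// 4. Generate a grounded answer.
var response = await chatService.GetChatMessageContentAsync(prompt);
```

Updating the vector store changes system behavior on the very next query. No retraining is required.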

Why RAG Replaced Fine-Tuning in Most Systems

Fine-tuning modifies the model itself.

RAG modifies the context provided to the model.

| Feature | Fine-Tuning | RAG |
|---|---|---|
| Cost | High | Low |
| Data freshness | Static | Real-time |
| Transparency | Black box | Source-aware |
| Implementation | ML engineering | Software architecture |

For most production systems that need fresh, private, or citable knowledge, RAG scales better operationally.

From Linear Pipelines to Agent Loops

Classical ML pipelines are linear and stateless.

Modern AI systems are iterative and stateful.

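```csharp
// planner, executor, and memory are hypothetical components;
// result.GoalReached is an assumed completion signal.
var goalReached = false;
while (!goalReached)
{
    var plan = await planner.CreateAsync(goal, memory); // plan from the goal and past results
    var result = await executor.ExecuteAsync(plan);      // act: call tools, query data
    await memory.StoreAsync(result);                     // remember the outcome
    goalReached = result.GoalReached;                    // stop once the goal is met
}
```

Because each new plan is built from the memory of earlier results, the loop supports planning, execution, reflection, and correction.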

There is no equivalent pattern in classical ML.
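A runnable sketch of the loop with hypothetical stand-ins: a goal made of three tasks, a dictionary of tools (one of which fails on its first call), and a list as memory. Planning reads memory, so a failed step is simply planned again; `maxSteps` bounds the loop in case the goal is never reached.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical goal: gather three facts, each produced by a named tool.
string[] goal = { "weather", "calendar", "traffic" };
int trafficCalls = 0;

// Hypothetical tools; "traffic" fails (returns null) on its first call.
var tools = new Dictionary<string, Func<string?>>
{
    ["weather"] = () => "sunny",
    ["calendar"] = () => "meeting at 10",
    ["traffic"] = () => ++trafficCalls == 1 ? null : "light"
};

// Memory: the outcome of every step, successful or not.
var memory = new List<(string Task, string? Result)>();
const int maxSteps = 10; // guard against a goal that is never reached

bool Done() => goal.All(g => memory.Any(m => m.Task == g && m.Result != null));

for (int step = 0; step < maxSteps && !Done(); step++)
{
    // Plan: pick the first task that memory does not show as completed.
    string task = goal.First(g => !memory.Any(m => m.Task == g && m.Result != null));

    // Execute the chosen tool.
    string? result = tools[task]();

    // Store the outcome; a failed task stays open and is planned again next step.
    memory.Add((task, result));
    Console.WriteLine($"step {step}: {task} -> {result ?? "failed, will retry"}");
}
```

Here the traffic tool fails once, is retried on the next step, and the loop stops after four steps.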

MCP Introduces a New Integration Layer

The Model Context Protocol (MCP) standardizes how AI systems interact with tools, data sources, and memory. Instead of tightly coupled, hard-coded integrations, systems become composable and interoperable. Classical ML had no equivalent abstraction because the "tools" were usually baked into the preprocessing code.
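As a concrete illustration, MCP messages are JSON-RPC 2.0, and a client invokes a tool with a `tools/call` request that carries the tool `name` and its `arguments`. The sketch below only builds that message with `System.Text.Json`; the tool name `get_weather` and its argument are hypothetical, and the transport and initialization handshake are omitted.

```csharp
using System;
using System.Text.Json;

// An MCP "tools/call" request expressed as a JSON-RPC 2.0 message.
// The tool name and arguments are hypothetical.
var request = new
{
    jsonrpc = "2.0",
    id = 1,
    method = "tools/call",
    @params = new
    {
        name = "get_weather",
        arguments = new { location = "Berlin" }
    }
};

// "@params" is C# escaping for the reserved word; it serializes as "params".
string json = JsonSerializer.Serialize(request);
Console.WriteLine(json);
```

The same message shape works for any MCP server that exposes a tool with that name, which is what makes the integration composable rather than hard-coded per tool.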

When Classical ML Is Still the Right Choice

Classical ML remains superior when:

- Data is structured and stable

- Latency must be extremely low

- Interpretability is mandatory

- Regulatory constraints require the model itself to be auditable

- Infrastructure resources are limited

Common examples include credit scoring, fraud detection, and high-frequency trading.

The Future Is Hybrid

Modern production systems combine both approaches. A common stack looks like this:

- Classical ML: Used for signals, scoring, and anomaly detection.

- LLMs: Used for reasoning, language understanding, and synthesis.

- RAG: Used for grounding the system in private or fresh data.

- Agents: Used for task automation and multi-step execution.

- MCP: Used as the integration layer between these components.

This is not a replacement.

It is a higher-level composition.
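A minimal sketch of that composition, assuming hypothetical fraud-score weights and a placeholder `ExplainWithLlm` function where a real system would call a language model (possibly grounded with RAG). The cheap classical model scores every request; only high-risk cases reach the LLM.

```csharp
using System;

// Classical ML: a cheap, interpretable fraud score (hypothetical logistic weights).
static double FraudScore(double amount, bool newDevice)
{
    double logit = -6.0 + 0.004 * amount + (newDevice ? 2.0 : 0.0);
    return 1.0 / (1.0 + Math.Exp(-logit));
}

// Hypothetical placeholder for an LLM call, such as a RAG-grounded review.
static string ExplainWithLlm(double amount, double score) =>
    $"[LLM] Review transaction of {amount} (risk {score:F2}) against policy documents.";

// Orchestration: every request is scored classically; only risky ones escalate.
static string Handle(double amount, bool newDevice)
{
    double score = FraudScore(amount, newDevice);
    return score < 0.5 ? "approved" : ExplainWithLlm(amount, score);
}

Console.WriteLine(Handle(amount: 40, newDevice: false));
Console.WriteLine(Handle(amount: 1500, newDevice: true));
```

The 0.5 escalation threshold is illustrative; in practice it would be tuned against the cost of LLM calls and the cost of missed fraud.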

Final Notes

Classical machine learning taught systems how to predict outcomes from structured data. Modern AI systems extend this foundation by enabling reasoning, interaction, and adaptation through orchestration, context, and retrieval. Understanding both paradigms, and knowing when to apply each, is now a core requirement for engineers designing reliable, scalable AI systems.

This shift is not about replacing classical machine learning, but about composing it within larger, more flexible architectures.

See you in the next issue.

Stay curious.
