Modern AI systems are no longer isolated models responding to one-off prompts. The next generation of intelligent applications relies on context-aware integration, deterministic tool execution, and dynamic reasoning. The Model Context Protocol (MCP) provides a standardized way to connect AI models with external tools, APIs, and services, enabling fully orchestrated AI workflows. In this issue, we explore a production-ready MCP setup implemented in C# and .NET 9, integrating a simple time tool with Ollama as the LLM backend.

The design emphasizes three principles:

- Deterministic Tool Execution through MCP over STDIO

- Dynamic LLM Integration through the Ollama HTTP API

- Clean Separation of Protocol, Logging, and AI Reasoning

The result is a robust and maintainable architecture that can serve as the foundation for larger, tool-driven AI systems.

System Overview

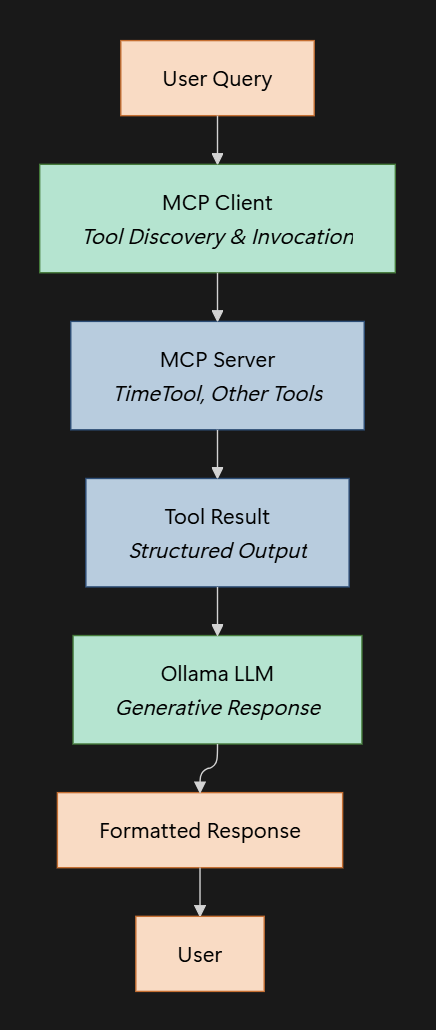

The workflow consists of three core components:

- MCP Server hosting deterministic tools over STDIO

- MCP Client discovering and invoking tools dynamically

- LLM client folding tool results into an AI-driven conversation

Users query the AI system. The MCP client identifies relevant tools and invokes them. Results are then passed to the LLM, which produces a user-friendly response. This architecture preserves a clean separation between deterministic components and generative reasoning.

MCP Server Implementation

The server runs as a standalone .NET console application. It is responsible for exposing deterministic tools to clients using MCP over STDIO.

```shell
dotnet new console -n MCPServer
cd MCPServer
dotnet add package ModelContextProtocol --version 0.1.0-preview.14
dotnet add package Microsoft.Extensions.Hosting
```

The server setup focuses on safe logging and tool discovery:

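```csharp
// Program.cs
using Microsoft.Extensions.DependencyInjection;
using Microsoft.Extensions.Hosting;   // Host.CreateApplicationBuilder
using Microsoft.Extensions.Logging;

var builder = Host.CreateApplicationBuilder(args);

// STDOUT carries the MCP protocol messages, so send all console logs
// (every level from Trace upward) to STDERR instead.
builder.Logging.ClearProviders();
builder.Logging.AddConsole(options =>
{
    options.LogToStandardErrorThreshold = LogLevel.Trace;
});

// Register the MCP server, use the STDIO transport, and expose every
// [McpServerToolType] class found in this assembly.
builder.Services
    .AddMcpServer()
    .WithStdioServerTransport()
    .WithToolsFromAssembly();

await builder.Build().RunAsync();
```

The time tool lives in its own file:

```csharp
// TimeTool.cs
using System;
using System.ComponentModel;          // [Description]
using ModelContextProtocol.Server;    // [McpServerToolType], [McpServerTool]

namespace MCPServer
{
    [McpServerToolType]
    public static class TimeTool
    {
        // DateTime.Now is the server machine's local time; the city is only echoed back.
        [McpServerTool, Description("Get the current time for a city")]
        public static string GetCurrentTime(string city) =>
            $"It is {DateTime.Now:HH:mm} in {city}.";
    }
}
```

Key aspects of the server design: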

- All logging is redirected to stderr to prevent protocol corruption

- Tools are discovered automatically from the assembly

- The time tool returns formatted, parseable output suitable for LLM consumption
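The stderr rule follows from how the stdio transport works: per the MCP specification, client and server exchange JSON-RPC 2.0 messages over the server's stdin and stdout, one message per line with no embedded newlines, and the server must not write anything to stdout that is not a valid MCP message. A single stray log line on stdout is therefore a malformed message from the client's point of view. The sketch below uses only System.Text.Json (not the MCP SDK) to build the `tools/call` request a client would send for `GetCurrentTime`; the request id `1` and the helper name `BuildToolCall` are illustrative assumptions.

```csharp
using System;
using System.Text.Json.Nodes;

// Build a JSON-RPC 2.0 "tools/call" request like the one an MCP client sends
// to invoke a tool. The id is an arbitrary example value.
string BuildToolCall(int id, string toolName, JsonObject arguments)
{
    var request = new JsonObject
    {
        ["jsonrpc"] = "2.0",
        ["id"] = id,
        ["method"] = "tools/call",
        ["params"] = new JsonObject
        {
            ["name"] = toolName,
            ["arguments"] = arguments
        }
    };
    // ToJsonString() emits compact JSON (no indentation), so the whole
    // message fits on a single line, as the stdio framing requires.
    return request.ToJsonString();
}

string line = BuildToolCall(1, "GetCurrentTime", new JsonObject { ["city"] = "Illzach, France" });

// Protocol messages go to stdout, one per line; diagnostics go to stderr,
// which the client does not parse as protocol traffic.
Console.Out.WriteLine(line);
Console.Error.WriteLine("[log] sent tools/call");
```

The printed line is exactly the kind of text that travels over the pipe. The SDK performs this framing itself, so application code never writes it by hand; the only obligation left to the server author is keeping stdout clean.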

This setup can be extended to include any number of deterministic MCP tools while maintaining protocol safety.
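As written, `GetCurrentTime` formats `DateTime.Now`, which is the server machine's local time; the `city` argument is only echoed back, so the answer is right for Illzach only when the server runs in that time zone. One possible extension, an assumption rather than part of the original project, is to accept an IANA time zone ID such as `Europe/Paris` and convert with `TimeZoneInfo`. The sketch keeps the formatting in a pure helper so it can be tested with a fixed instant; `FormatCityTime` and `GetCurrentTimeIn` are hypothetical names, and in the server `GetCurrentTimeIn` would carry the same `[McpServerTool]` and `Description` attributes as `TimeTool.GetCurrentTime`.

```csharp
using System;
using System.Globalization;

// Pure formatting logic: convert a UTC instant into the target zone and format it
// like the original TimeTool. InvariantCulture keeps ':' as the separator on every locale.
string FormatCityTime(DateTimeOffset utcNow, TimeZoneInfo zone, string city)
{
    DateTimeOffset local = TimeZoneInfo.ConvertTime(utcNow, zone);
    return $"It is {local.ToString("HH:mm", CultureInfo.InvariantCulture)} in {city}.";
}

// Hypothetical tool body: resolve an IANA ID such as "Europe/Paris" and use the real clock.
// On .NET 6+ IANA IDs generally resolve on Windows too (ICU-based conversion);
// an unknown ID throws TimeZoneNotFoundException.
string GetCurrentTimeIn(string city, string timeZoneId) =>
    FormatCityTime(DateTimeOffset.UtcNow, TimeZoneInfo.FindSystemTimeZoneById(timeZoneId), city);

// Deterministic demo: a fixed instant and a fixed UTC+1 zone without DST rules,
// so the output does not depend on the machine's clock or time zone database.
var cet = TimeZoneInfo.CreateCustomTimeZone("CET-sketch", TimeSpan.FromHours(1), "CET (sketch)", "CET");
var instant = new DateTimeOffset(2025, 1, 15, 12, 30, 0, TimeSpan.Zero);
Console.WriteLine(FormatCityTime(instant, cet, "Illzach, France")); // It is 13:30 in Illzach, France.
```

Passing the zone explicitly also lets the LLM layer forward a zone it inferred from the user's question, instead of relying on where the server happens to be deployed.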

MCP Client Implementation

The client is responsible for launching the MCP server, discovering tools, invoking them, and integrating results into an AI workflow. It uses StdioClientTransport, which starts the server as a child process and exchanges MCP messages over that process's standard input and output.

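```csharp
using Microsoft.Extensions.Logging;
using ModelContextProtocol.Client;
using ModelContextProtocol.Protocol.Transport;

// Client-side console logging (AddConsole comes from Microsoft.Extensions.Logging.Console).
using ILoggerFactory loggerFactory = LoggerFactory.Create(logging => logging.AddConsole());

// Launch the server as a child process; MCP messages flow over its STDIN/STDOUT.
// The relative path assumes the client runs from its own bin\Debug\net9.0 folder.
var stdioTransport = new StdioClientTransport(
    new StdioClientTransportOptions
    {
        Name = "Time MCP Server",
        Command = @"..\..\..\..\MCPServer\bin\Debug\net9.0\MCPServer.exe"
    },
    loggerFactory);

await using var mcpClient = await McpClientFactory.CreateAsync(
    stdioTransport,
    new McpClientOptions
    {
        ClientInfo = new() { Name = "Time Client", Version = "1.0.0" }
    },
    loggerFactory);

// Discover available tools
IList<McpClientTool> mcpTools = await mcpClient.ListToolsAsync();
foreach (var t in mcpTools)
    Console.WriteLine($"{t.Name}: {t.Description}");

// Call the time tool and join the text content blocks of its result
var timeTool = mcpTools.FirstOrDefault(t => t.Name == "GetCurrentTime");
string toolResult = timeTool != null
    ? string.Join("\n", (await timeTool.CallAsync(new Dictionary<string, object?>
        { ["city"] = "Illzach, France" })).Content.Select(c => c.Text))
    : "Tool not found!";
```

The MCP client discovers tools at runtime instead of hardcoding endpoints or method signatures: it needs only a tool's name and argument names to call it. This decouples the AI model from the deterministic functionality, allowing the architecture to scale safely.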
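`ListToolsAsync` corresponds to the MCP `tools/list` request. Each tool in the result carries a `name`, a `description`, and an `inputSchema` (a JSON Schema object describing its arguments), which is what lets a client call a tool it was not compiled against. The sketch parses a hand-written `tools/list` result with System.Text.Json and returns the required argument names for a given tool. The JSON is an illustrative assumption of what the time server advertises, not captured output, and `RequiredArguments` is a hypothetical helper.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.Json;

// Hand-written example of a "tools/list" result (illustrative, not captured from the server).
const string toolsListResult = """
{
  "tools": [
    {
      "name": "GetCurrentTime",
      "description": "Get the current time for a city",
      "inputSchema": {
        "type": "object",
        "properties": { "city": { "type": "string" } },
        "required": ["city"]
      }
    }
  ]
}
""";

// Return the required argument names for a tool, or null if the tool is not advertised.
List<string>? RequiredArguments(string listResultJson, string toolName)
{
    using JsonDocument doc = JsonDocument.Parse(listResultJson);
    foreach (JsonElement tool in doc.RootElement.GetProperty("tools").EnumerateArray())
    {
        if (tool.GetProperty("name").GetString() != toolName) continue;

        // "required" is optional in JSON Schema; a missing list means no required arguments.
        if (!tool.GetProperty("inputSchema").TryGetProperty("required", out JsonElement required))
            return new List<string>();

        // Materialize before the JsonDocument is disposed.
        return required.EnumerateArray().Select(e => e.GetString()!).ToList();
    }
    return null;
}

List<string>? requiredArgs = RequiredArguments(toolsListResult, "GetCurrentTime");
Console.WriteLine(requiredArgs is null ? "Tool not found!" : string.Join(", ", requiredArgs)); // city
```

A client could check these names against the arguments it intends to send before calling `CallAsync`, and report a missing tool the same way the listing above does.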

LLM Integration

After the deterministic results are retrieved from the MCP server, they are passed to the LLM for reasoning and conversation. The following code sends them to a local Ollama instance through an HTTP-based chat client:

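```csharp
// ChatMessage and ChatRole match the Microsoft.Extensions.AI types (assumed).
// OllamaHttpChatClient is assumed to be a project-level wrapper around Ollama's
// HTTP API (default port 11434), not a type from an SDK.
var ollamaClient = new OllamaHttpChatClient(new Uri("http://localhost:11434/"), "mistral:7b");

var messages = new List<ChatMessage>
{
    new(ChatRole.System, "You are a helpful assistant that can use MCP tools."),
    new(ChatRole.User, "What is the current (CET) time in Illzach, France?"),
    // The MCP tool output is supplied as grounding context for the final answer
    new(ChatRole.Assistant, $"According to the MCP tool: {toolResult}")
};

string aiChatResponse = await ollamaClient.GetChatResponseAsync(messages);
Console.WriteLine(aiChatResponse);
```

The architecture ensures: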

- Deterministic results from tools are passed directly to the LLM

- LLM output is grounded in real tool data, which reduces the risk of hallucinated answers

- Multiple tools can be orchestrated within the same conversation context

This pattern allows complex, multi-tool workflows while maintaining a predictable response flow.
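`OllamaHttpChatClient` and its `GetChatResponseAsync` method are not part of an SDK the article names; they appear to be a thin wrapper defined in the project. A minimal sketch of what such a wrapper has to do, assuming a local Ollama server on the default port 11434, is to POST the model name, the messages, and `"stream": false` to Ollama's `/api/chat` endpoint and read `message.content` from the JSON reply. The helper names (`BuildChatRequest`, `ReadChatReply`, `ChatAsync`) and the placeholder tool result are hypothetical, and the network call is left commented out so the request-building and parsing logic runs offline.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Json;
using System.Text.Json;
using System.Text.Json.Nodes;
using System.Threading.Tasks;

// Build the JSON body for Ollama's /api/chat endpoint.
// "stream": false asks Ollama for a single JSON object instead of a stream of chunks.
JsonObject BuildChatRequest(string model, params (string Role, string Content)[] messages)
{
    var array = new JsonArray();
    foreach (var (role, content) in messages)
        array.Add(new JsonObject { ["role"] = role, ["content"] = content });

    return new JsonObject { ["model"] = model, ["messages"] = array, ["stream"] = false };
}

// Extract the assistant's text from a non-streaming /api/chat response.
string ReadChatReply(string responseJson)
{
    using JsonDocument doc = JsonDocument.Parse(responseJson);
    return doc.RootElement.GetProperty("message").GetProperty("content").GetString() ?? "";
}

// POST the request to a running Ollama server and return the reply text.
async Task<string> ChatAsync(HttpClient http, JsonObject request)
{
    using HttpResponseMessage response = await http.PostAsJsonAsync("api/chat", request);
    response.EnsureSuccessStatusCode();
    return ReadChatReply(await response.Content.ReadAsStringAsync());
}

// Placeholder standing in for the MCP tool result (hypothetical value).
string toolResult = "It is 14:05 in Illzach, France.";

JsonObject chatRequest = BuildChatRequest("mistral:7b",
    ("system", "You are a helpful assistant that can use MCP tools."),
    ("user", "What is the current (CET) time in Illzach, France?"),
    ("assistant", $"According to the MCP tool: {toolResult}"));

Console.WriteLine(chatRequest.ToJsonString());

// Uncomment to call a local Ollama server:
// using var http = new HttpClient { BaseAddress = new Uri("http://localhost:11434/") };
// Console.WriteLine(await ChatAsync(http, chatRequest));
```

Setting `stream` to false trades incremental output for a single response object, which keeps parsing trivial; a streaming client would instead read one JSON object per line until `done` is true.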

Architecture Diagram

The architecture of a modern MCP-based AI workflow can be summarized in three distinct layers:

MCP Server

Hosts deterministic tools such as TimeTool and exposes them over the STDIO transport protocol. The server is responsible for executing tool functions reliably and returning structured outputs. Logs are routed to STDERR to avoid protocol corruption, ensuring robust, traceable communication with clients.

MCP Client

Discovers available MCP tools, invokes functions based on AI requests, and collects results. It acts as an orchestration layer between the user query and the deterministic tools, maintaining separation between tool execution and generative reasoning.

LLM Client (Ollama)

Integrates deterministic results from the MCP client into AI-driven responses. The LLM can use tool outputs as context, generating coherent, user-friendly answers while maintaining auditability.

This layered separation ensures:

- Modularity – Each component has a single, well-defined responsibility.

- Deterministic vs Generative Separation – Tool execution remains predictable, while LLM reasoning is flexible but context-bound.

- Traceability and Auditability – Tool outputs and AI responses can be logged and inspected independently, which is essential for enterprise applications.
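The three layers can be written as narrow contracts so that each one is replaceable and testable on its own. In the sketch below, which is illustrative rather than the project's code, one delegate stands in for the MCP client, a second stands in for the Ollama call, and an audit list records every tool result and model reply separately, which is what makes the traceability point concrete. `Answer`, `auditLog`, and the two fakes are hypothetical names; the fakes let the flow run without an MCP server or Ollama.

```csharp
using System;
using System.Collections.Generic;

// Audit trail: tool invocations and model replies are recorded as separate entries.
var auditLog = new List<string>();

// Orchestration: deterministic tool call first, then a generative answer grounded on it.
// invokeTool stands in for the MCP client layer; askLlm stands in for the LLM layer.
string Answer(
    string question,
    string toolName,
    Dictionary<string, object> arguments,
    Func<string, Dictionary<string, object>, string> invokeTool,
    Func<string, string, string> askLlm)
{
    string toolOutput = invokeTool(toolName, arguments);
    auditLog.Add($"tool:{toolName} -> {toolOutput}");

    string reply = askLlm(question, toolOutput);
    auditLog.Add($"llm -> {reply}");
    return reply;
}

// Offline fakes (hypothetical) replacing the MCP server and Ollama.
Func<string, Dictionary<string, object>, string> fakeTool =
    (name, a) => name == "GetCurrentTime" ? $"It is 13:30 in {a["city"]}." : "Tool not found!";
Func<string, string, string> fakeLlm =
    (q, context) => $"Based on the tool result: {context}";

string answer = Answer(
    "What is the current (CET) time in Illzach, France?",
    "GetCurrentTime",
    new Dictionary<string, object> { ["city"] = "Illzach, France" },
    fakeTool,
    fakeLlm);

Console.WriteLine(answer);
foreach (string entry in auditLog) Console.WriteLine(entry);
```

In a real deployment the fakes would be replaced by calls to `McpClientTool.CallAsync` and the Ollama client, while the orchestration and audit code stay unchanged.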

The following flowchart illustrates this architecture:

Key Advantages

- Fully Local and Deterministic: MCP tools run locally in their own process, and their output does not depend on LLM sampling

- Dynamic Discovery: Clients automatically detect new tools

- Safe LLM Integration: Responses are grounded in tool results rather than in the model's recall alone

- Scalable Architecture: New tools and workflows can be added without modifying client logic

Potential Enhancements

Future improvements could include:

- HTTP-based transports (SSE, or Streamable HTTP in newer MCP revisions) so the server can run remotely and serve multiple clients

- Authentication and secure communication for production environments

- Plugin-based dynamic tool injection

- Multi-agent orchestration with prioritized tool selection

Final Notes

This issue demonstrated a modern MCP architecture in C# that separates deterministic tool execution from generative reasoning. By combining MCP over STDIO with Ollama integration, the system achieves a maintainable, scalable, and predictable AI workflow. Deterministic layers provide safety and auditability, while the generative layer allows flexibility for intelligent conversation.

Explore the source code at the GitHub repository.

See you in the next issue.

Stay curious.
