Most AI discussions jump straight to autonomous agents, planners, and multi-agent swarms. In practice, however, the majority of production-ready AI systems start with something far simpler: a reactive agent that can reason, invoke tools deterministically, and operate locally without external dependencies.

In this issue, we explore a concrete, minimal example of such a system: a local Semantic Kernel ChatCompletionAgent running on .NET 10 with Ollama, extended using class-based function calling. The result is a clean, predictable, and fully local AI agent that bridges natural language reasoning and deterministic execution.

Why Reactive Agents Matter

Before systems can plan, reflect, or self-correct, they must reliably answer a more fundamental question:

Can the model correctly decide when to act, and can it act deterministically when it does?

Reactive agents solve exactly this problem. They:

- Respond directly to user input

- Invoke tools only when appropriate

- Operate in a single-step request → response loop

- Keep humans firmly in the loop

This architecture avoids the brittleness and unpredictability of premature autonomy while still delivering real utility.

Agent vs Agentic: Clearing the Terminology Confusion

One source of persistent confusion in AI discourse is the overloaded term "agent".

In Semantic Kernel, an agent is a structured abstraction around an LLM that includes:

- Identity

- Instructions

- Tools

- Execution behavior

In AI research, agentic systems typically imply:

- Explicit goals

- Planning and task decomposition

- Multi-step execution loops

- Persistent memory and self-evaluation

This project implements the former, not the latter.

It is best described as a:

Reactive, tool-augmented LLM agent

There is no planner, no autonomy, and no hidden execution loop. This simplicity is intentional and valuable.

System Overview

The project consists of three core elements:

1. Semantic Kernel Agent

A ChatCompletionAgent that defines the AI’s identity, rules, and execution behavior.

2. Deterministic Tool (Function)

A class-based plugin exposing a GetCityTime function via [KernelFunction].

3. Local LLM Runtime

Ollama running a local llama3.2:3b model for reasoning and language generation.

The execution flow is straightforward:

User input → Agent reasoning → Optional tool invocation → Final response

There is no hidden orchestration layer and no external service dependency.

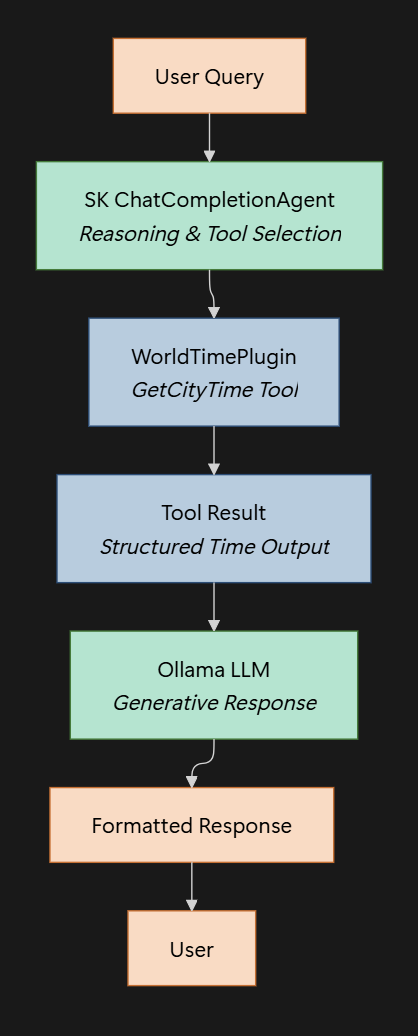

Architecture Diagram

The following flowchart illustrates the system architecture:

This architecture enables:

- Modularity – Each component has a well-defined responsibility.

- Deterministic vs Generative Separation – Tool execution is predictable, while LLM reasoning remains flexible but context-bound.

- Traceability and Auditability – Structured outputs and responses can be logged and inspected independently.

Key Advantages

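- Fully Local, with Deterministic Tools: The agent and tool execute locally with no external service dependencies. Tool output is deterministic, which keeps the agent's answers predictable even though the model's wording can vary.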

- Clear Separation of Concerns: Reasoning, deterministic execution, and tool logic are distinct, simplifying debugging and extension.

- Safe LLM Integration: Generative outputs are grounded in structured tool results, reducing hallucination risk.

- Extensible Architecture: Additional tools or plugins can be added without modifying core agent logic.

Defining the Agent

The agent is defined using Semantic Kernel’s agent abstraction:

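    using Microsoft.SemanticKernel;
    using Microsoft.SemanticKernel.Agents;

    var agent = new ChatCompletionAgent
    {
        Name = "TimeAssistant",
        Instructions = "...",
        Kernel = kernel,
        // Let the model decide when to call a registered function.
        Arguments = new KernelArguments(
            new PromptExecutionSettings
            {
                FunctionChoiceBehavior = FunctionChoiceBehavior.Auto()
            })
    };

Two design choices matter here: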

- Explicit instructions constrain behavior and output format

- Automatic function selection allows the model to decide when tool invocation is required

This keeps control logic declarative rather than procedural.

Class-Based Function Calling

Instead of inline lambdas or ad-hoc delegates, the project uses a class-based plugin:

    using Microsoft.SemanticKernel;

    public class WorldTimePlugin
    {
        // Exposed to the model as the 'GetCityTime' tool.
        [KernelFunction("GetCityTime")]
        public string GetCityTime(string city)
        {
            ...
        }
    }

This approach provides:

- Clear separation between reasoning and execution

- Testable, deterministic logic

- Straightforward extensibility

The plugin maps cities to time zones and returns a formatted, predictable result suitable for LLM consumption.
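The article elides the plugin body, so the following is only a minimal sketch of how such a city-to-time-zone lookup might look. The class name `CityTimeLookup`, the three cities, and the output wording are all illustrative assumptions, not the project's actual code. In the real plugin the method would carry the `[KernelFunction]` attribute. Here the current instant is passed in as a parameter (also an assumption) so the logic can be tested without depending on the clock. The IANA zone IDs assume .NET 6 or later with ICU available.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;

// Hypothetical stand-in for the plugin's lookup logic; names, cities, and
// output wording are illustrative assumptions.
public static class CityTimeLookup
{
    // City name -> IANA time zone ID, matched case-insensitively.
    private static readonly Dictionary<string, string> Zones =
        new(StringComparer.OrdinalIgnoreCase)
        {
            ["Tokyo"] = "Asia/Tokyo",
            ["London"] = "Europe/London",
            ["New York"] = "America/New_York",
        };

    // Takes the current instant as input so the result is reproducible in tests.
    public static string Format(string city, DateTimeOffset utcNow)
    {
        var name = city.Trim();
        if (!Zones.TryGetValue(name, out var zoneId))
        {
            // A fixed, recognizable message gives the model a clear failure signal.
            return $"City '{name}' is not supported.";
        }

        var zone = TimeZoneInfo.FindSystemTimeZoneById(zoneId);
        var local = TimeZoneInfo.ConvertTime(utcNow, zone);

        // Invariant culture keeps ':' as the separator on every machine.
        return $"{name}: {local.ToString("HH:mm", CultureInfo.InvariantCulture)}";
    }
}
```

Because the function depends only on its inputs, a unit test can pin the instant and assert the exact string, which is what makes the tool side of the agent deterministic.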

Deterministic Behavior Through System Instructions and Context Design

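While Semantic Kernel enables tool invocation and Ollama provides local reasoning, the agent's behavior is kept predictable through instructions: the tool supplies deterministic data, and the instructions constrain when it is called and how its result is reported.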

This is where prompt engineering ends and context engineering begins.

The following instruction block defines the behavioral contract of the agent:

    Instructions = """
        You are a precise World Time Assistant.
        Your primary goal is to provide the current time for requested cities.

        RULES:
        1. ALWAYS use the 'GetCityTime' tool when a user mentions a city.
        2. ALWAYS format the time in 24-hour HH:MM format (e.g., 14:30 instead of 2:30 PM).
        3. If a city is not supported by the tool, your response must be exactly: 'I don't know.'
        4. Keep responses brief and professional.
        """

This instruction block is not a casual prompt.

It is behavioral infrastructure.

Why This Matters

- Prevents hallucinated timestamps

- Enforces tool-first reasoning

- Produces machine-safe outputs

- Creates predictable failure modes

In effect, instructions become part of the system architecture, just like types, interfaces, and protocols in traditional software.
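One way to treat the instructions as a contract is to check replies against them in code. The sketch below is not part of the original project; the class `ReplyContract` and its rules are assumptions derived from rules 2 and 3 of the instruction block. It accepts a reply only if it is exactly the fallback sentence, or if it contains a 24-hour time and no 12-hour marker.

```csharp
using System;
using System.Text.RegularExpressions;

// Hypothetical post-check for the agent's reply; an illustration of a
// "machine-safe output" test, not code from the original project.
public static class ReplyContract
{
    // Rule 3: the exact reply required for unsupported cities.
    private const string UnknownCityReply = "I don't know.";

    // Rule 2: a 24-hour HH:MM time such as 09:05 or 23:59.
    private static readonly Regex Time24 = new(@"\b(?:[01]\d|2[0-3]):[0-5]\d\b");

    // A 12-hour marker directly after a digit, such as "2:30 PM" or "11:00a.m".
    private static readonly Regex Meridiem = new(@"\d\s?[ap]\.?m\b", RegexOptions.IgnoreCase);

    public static bool IsCompliant(string reply)
    {
        var text = reply.Trim();
        if (text == UnknownCityReply)
        {
            return true;
        }

        return Time24.IsMatch(text) && !Meridiem.IsMatch(text);
    }
}
```

A check like this could run in tests or as a logging guard, so that a reply drifting from the contract shows up as a failed assertion rather than as a surprise downstream.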

Fully Local Execution with Ollama

All reasoning and tool selection runs locally via Ollama:

    builder.AddOllamaChatCompletion(
        "llama3.2:3b",
        new Uri("http://localhost:11434"));

This enables:

- No API keys

- No cloud dependencies

- Full data privacy

- Reproducible behavior (the model version is pinned locally rather than updated by a provider)

Local-first AI architectures are increasingly important for both cost control and trust.
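For context, here is a rough sketch of how the kernel, the Ollama connector, and the plugin might be wired together before the agent is created. It assumes the `Microsoft.SemanticKernel` and `Microsoft.SemanticKernel.Connectors.Ollama` NuGet packages are installed. The suppressed warning ID is an assumption based on the connector's preview status and may differ in your version. The plugin body is a placeholder.

```csharp
using System;
using Microsoft.SemanticKernel;

// Assumption: the Ollama connector is flagged experimental under this ID;
// the ID may differ, or be unnecessary, in your installed version.
#pragma warning disable SKEXP0070

var builder = Kernel.CreateBuilder();

// Model name and endpoint are the ones used in the article.
builder.AddOllamaChatCompletion("llama3.2:3b", new Uri("http://localhost:11434"));

// Register the class-based plugin so its [KernelFunction] methods are offered as tools.
builder.Plugins.AddFromType<WorldTimePlugin>();

Kernel kernel = builder.Build();
Console.WriteLine($"Registered plugins: {kernel.Plugins.Count}");

// Placeholder plugin so the sketch compiles on its own; the body is illustrative only.
public class WorldTimePlugin
{
    [KernelFunction("GetCityTime")]
    public string GetCityTime(string city) =>
        $"{city}: time lookup not implemented in this sketch";
}
```

Building the kernel does not contact Ollama; the local endpoint is only reached once the agent sends a chat request.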

Why This Architecture Works

This project deliberately avoids overengineering. Its strength lies in its constraints.

Key properties:

- Deterministic tool execution

- Clear separation of concerns

- Human-in-the-loop by default

- Minimal but extensible foundation

From here, it is straightforward to add:

- Memory

- Planning agents

- Multi-agent coordination

- Persistent state

But none of those are required to build something useful today.

Final Notes

Not every AI system needs autonomy; most need reliability. Reactive agents with deterministic tools represent a pragmatic midpoint between simple chatbots and fully agentic systems, making them easier to reason about, easier to debug, and far easier to deploy responsibly. This project is a reminder that good AI engineering starts with clear boundaries, not with buzzwords.

Explore the source code at the GitHub repository.

See you in the next issue.

Stay curious.
