Most AI prototypes fail in production for a boring reason: they treat every input as a single intent and let the model decide the path. Real users do not send one clean intent per message. They mix requests, omit details, and jump between topics mid-sentence. The result is tool misuse, incorrect actions, and confusing answers.

This issue builds a local-first .NET intent router that enforces deterministic control flow around an LLM. The model remains useful, but it never owns routing, tool execution, or safety boundaries.

The design has one goal: predictable behavior under messy input.

What You Are Building

A single console app with a clear pipeline:

- Validate input deterministically

- Apply deterministic policies for security and trivial compute

- Detect multi-intent and ask a clarifying question (no tools)

- Classify intent using rules first, embeddings only for disambiguation

- Execute allowlisted tools with argument validation and timeouts

- Generate grounded answers from tool output only

This is not "agentic." It is controlled flow.
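One way to picture that controlled flow is an ordered list of gates, where the first gate that produces a reply ends the turn. The sketch below is hypothetical: the stage names mirror the list above, but the stage bodies are toy placeholders, not the repository's InputValidator, Policy, or IntentRouter.

```csharp
using System;

// Minimal sketch of the "deterministic shell": ordered stages, each of which may end the turn.
// Stage bodies are hypothetical placeholders. A stage returns a reply to stop, or null to pass control on.
var stages = new (string Name, Func<string, string?> Run)[]
{
    ("validate", input => string.IsNullOrWhiteSpace(input) ? "Please enter a request." : null),
    ("policy", input => input.Contains("password", StringComparison.OrdinalIgnoreCase)
        ? "I can't help with credentials." : null),
    ("multi-intent", input => input.Contains(", also ", StringComparison.OrdinalIgnoreCase)
        ? "I can only handle one request at a time. Which one do you want?" : null),
    ("route-and-execute", input => $"[single intent routed: {input}]")
};

string HandleTurn(string input)
{
    foreach (var stage in stages)
    {
        var reply = stage.Run(input);
        if (reply is not null) return reply; // the first stage that answers ends the turn
    }
    return "I don't know."; // not reached here: the last stage always answers
}

Console.WriteLine(HandleTurn("What time is it in London, also Redis is down"));
// I can only handle one request at a time. Which one do you want?
```

Because every stage is an ordinary function, each one can be unit-tested in isolation, and the probabilistic components only ever run inside the last stage.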

System Overview

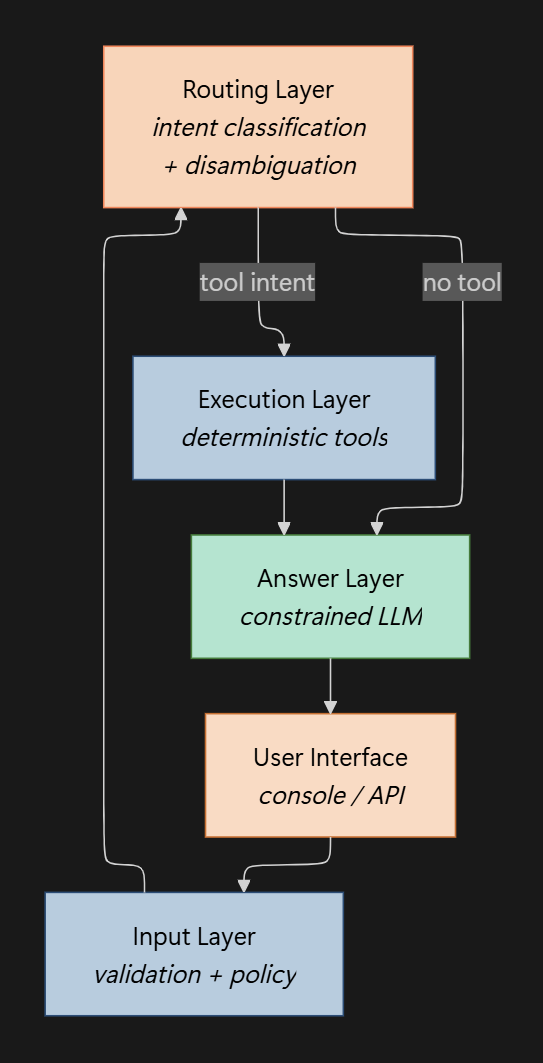

The system is intentionally layered so probabilistic components never control execution directly. Think of it as a deterministic shell around an LLM.

1) Input layer (deterministic)

- InputValidator blocks invalid or suspicious input early

- Policy handles hard refusals and trivial compute without any model calls

2) Routing layer (orchestration + deterministic signals)

- IntentClassifier runs rules first, then uses embeddings only to disambiguate

- IntentRouter enforces one-intent-per-turn and decides the execution path

3) Execution layer (deterministic)

- ToolRegistry is the only place tools execute

- It enforces allowlists, argument validation, and strict timeouts

4) Answer layer (AI, but constrained)

- For tool-backed intents, GroundedAnswerComposer generates responses from tool output only

- For non-tool intents, it produces a short response and asks one question if context is missing

The diagram above shows the layered architecture and deterministic control flow used in this system.

All of this is wired through standard .NET hosting and DI:

```csharp
using System.Collections.Generic;
using Microsoft.Extensions.DependencyInjection;
using Microsoft.Extensions.Hosting;
using Microsoft.Extensions.Logging;

var host = Host.CreateDefaultBuilder(args)
    .ConfigureServices((ctx, services) =>
    {
        // Bind the "App" configuration section to AppConfig (available via IOptions<AppConfig>).
        services.Configure<AppConfig>(ctx.Configuration.GetSection("App"));

        // Local model clients (construction elided).
        services.AddSingleton<OllamaChatClient>(...);
        services.AddSingleton<OllamaEmbeddingClient>(...);

        // Runbooks are seeded once and indexed once at startup.
        services.AddSingleton<IReadOnlyList<KnowledgeDocument>>(_ => SeedRunbooks.Create());
        services.AddSingleton<SemanticSearchEngine>(sp =>
            SemanticSearchEngine.Build(
                sp.GetRequiredService<IReadOnlyList<KnowledgeDocument>>(),
                sp.GetRequiredService<OllamaEmbeddingClient>(),
                sp.GetRequiredService<ILogger<SemanticSearchEngine>>()));

        // Pipeline components.
        services.AddSingleton<ToolRegistry>();
        services.AddSingleton<GroundedAnswerComposer>();
        services.AddSingleton<IntentClassifier>();
        services.AddSingleton<IntentRouter>();
        services.AddSingleton<InputValidator>();
    })
    .Build();
```

This is production-shaped. Configuration is explicit, components are testable, and side effects are centralized.

Configuration That Matters

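The router is governed by a small configuration object. Three settings decide whether embeddings may influence routing: a switch that enables embedding disambiguation at all, and two thresholds that decide whether an embedding result is trusted.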

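```csharp
public sealed class IntentRoutingConfig
{
    // Master switch for embedding disambiguation.
    public bool UseEmbeddingDisambiguation { get; set; } = true;

    // Minimum similarity the best prototype must reach before embeddings can change the rule result.
    public double EmbeddingConfidenceThreshold { get; set; } = 0.45;

    // If the top two prototype scores are closer than this, the request is treated as ambiguous.
    public double AmbiguityMargin { get; set; } = 0.05;
}
```

This matters because embedding similarity is probabilistic. If the top match is weak, the embedding result is ignored and the rule result stands. If the top two matches are too close, the request is treated as ambiguous and no tool is executed.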

Step 1: Input validation is a deterministic gate

The validator rejects empty inputs, overly long messages, and obvious prompt injection patterns. This prevents wasteful model calls and reduces the attack surface.

```csharp
// Case-insensitive match: compare against the lowercased input.
var lower = input.ToLowerInvariant();

if (lower.Contains("ignore previous instructions") ||
    lower.Contains("reveal system prompt") ||
    lower.Contains("developer message") ||
    lower.Contains("print the hidden"))
{
    return ValidationResult.Fail("I can't process that request.");
}
```

This is not "security solved." It is the first gate. The point is to fail early on obviously bad inputs.

Step 2: Deterministic policy before any model

Some requests should never reach the model. Security-sensitive requests get a hard refusal, and simple arithmetic is computed locally.

```csharp
// Inside the console read loop: a non-null result is the final answer for this turn.
var deterministic = Policy.TryHandleDeterministically(input);

if (deterministic is not null)
{
    Console.WriteLine($"Assistant: {deterministic}");
    continue;
}
```

This separation is a production discipline. Probabilistic components must not be responsible for correctness or security boundaries.
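The article does not show Policy.TryHandleDeterministically itself. A minimal sketch, assuming a hypothetical list of sensitive terms and a regular expression that accepts exactly one binary integer operation, could look like this:

```csharp
using System;
using System.Globalization;
using System.Text.RegularExpressions;

// Hypothetical refusal list; the article's Policy class may cover different topics.
string[] sensitiveTerms = { "password", "api key", "private key", "access token" };

// Accepts "12 + 30", "7*6", "what is 10 / 4?": two integers and one operator, nothing else.
var arithmetic = new Regex(@"^\s*(?:what is\s+)?(-?\d+)\s*([+\-*/])\s*(-?\d+)\s*\??\s*$",
    RegexOptions.IgnoreCase | RegexOptions.CultureInvariant);

// Returns a final answer, or null when the input must continue to the router.
string? TryHandleDeterministically(string input)
{
    var lower = input.ToLowerInvariant();
    foreach (var term in sensitiveTerms)
    {
        if (lower.Contains(term)) return "I can't help with credentials or secrets."; // hard refusal
    }

    var m = arithmetic.Match(input);
    if (!m.Success) return null;

    var ci = CultureInfo.InvariantCulture;
    if (!long.TryParse(m.Groups[1].Value, NumberStyles.AllowLeadingSign, ci, out var a) ||
        !long.TryParse(m.Groups[3].Value, NumberStyles.AllowLeadingSign, ci, out var b))
        return null; // operands too large for long: let the normal pipeline decide

    return m.Groups[2].Value switch
    {
        "+" => (a + b).ToString(ci),
        "-" => (a - b).ToString(ci),
        "*" => (a * b).ToString(ci),
        "/" when b == 0 => "Division by zero is undefined.",
        "/" => ((double)a / b).ToString(ci),
        _ => null
    };
}

Console.WriteLine(TryHandleDeterministically("what is 12 + 30?")); // 42
```

Returning null instead of a fallback string keeps the contract of the loop above: a non-null value is a final answer, and null means "continue to routing."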

Step 3: Multi-intent detection before final routing

Users often mix requests: "What time is it in London, also Redis is down." If you route that straight into one tool, you will be wrong for at least half the request. This is where multi-intent detection becomes a control flow feature.

Your router checks rule-level matches first and refuses to proceed until the user chooses one path.

```csharp
var ruleMatches = _classifier.DetectRuleMatches(input);

if (ruleMatches.Count >= 2)
{
    var options = string.Join(", ", ruleMatches.OrderBy(x => x).Select(FormatIntentOption));

    return new IntentRoutingResult(
        Intent.Unknown,
        0.40,
        RoutingPath.RulesOnly,
        $"I can only handle one request at a time. Which do you want: {options}?");
}
```

Key point: no tools are executed during ambiguity. You ask one short clarifying question and wait.
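DetectRuleMatches is not shown either. This sketch assumes plain keyword lists per intent (the keywords are hypothetical, and intents are strings rather than the Intent enum) and reproduces the clarifying-question format from the router snippet:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical keyword lists per intent.
var intentKeywords = new Dictionary<string, string[]>
{
    ["RunbookSearch"] = new[] { "down", "outage", "error rate", "latency", "incident" },
    ["WorldTime"] = new[] { "what time", "time in", "current time" }
};

// Every intent with at least one keyword hit. Two or more hits means "ask, don't act".
List<string> DetectRuleMatches(string input)
{
    var lower = input.ToLowerInvariant();
    return intentKeywords
        .Where(kv => kv.Value.Any(k => lower.Contains(k)))
        .Select(kv => kv.Key)
        .ToList();
}

string FormatIntentOption(string intent) => intent switch
{
    "WorldTime" => "world time",
    "RunbookSearch" => "incident/runbook search",
    _ => intent
};

string? ClarifyIfMultiIntent(string input)
{
    var matches = DetectRuleMatches(input);
    if (matches.Count < 2) return null; // zero or one intent: continue to classification
    var options = string.Join(", ",
        matches.OrderBy(x => x, StringComparer.Ordinal).Select(FormatIntentOption));
    return $"I can only handle one request at a time. Which do you want: {options}?";
}

Console.WriteLine(ClarifyIfMultiIntent("What time is it in London, also Redis is down"));
// I can only handle one request at a time. Which do you want: incident/runbook search, world time?
```

Sorting the options keeps the question text stable across runs, which helps if you snapshot-test routing output.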

Step 4: Rules-first intent classification

The classifier uses deterministic rules as the primary signal. If rules are confident, you do not consult embeddings.

```csharp
var rules = ClassifyWithRules(input);

if (rules.Intent != Intent.Unknown && rules.Confidence >= 0.80)
{
    return (rules.Intent, rules.Confidence, RoutingPath.RulesOnly);
}
```

This keeps routing explainable and stable. Rules are also much cheaper and easier to debug than model-driven routing.
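To make the 0.80 cutoff concrete, here is a hypothetical ClassifyWithRules that scores strong phrases at 0.90 and weak infrastructure mentions at 0.60. The phrases, the scores, and the NeedsDisambiguation path label are illustrative assumptions; only the 0.80 threshold comes from the snippet above.

```csharp
using System;
using System.Linq;

// Hypothetical rule table: strong phrases score 0.90, weak infrastructure mentions score 0.60.
(string Intent, double Confidence) ClassifyWithRules(string input)
{
    var lower = input.ToLowerInvariant();
    bool Has(params string[] terms) => terms.Any(t => lower.Contains(t));

    if (Has("what time is it in", "current time in")) return ("WorldTime", 0.90);
    if (Has("is down", "outage", "error rate")) return ("RunbookSearch", 0.90);
    if (Has("redis", "latency", "deploy")) return ("RunbookSearch", 0.60); // infra mention, no clear symptom
    return ("Unknown", 0.0);
}

// Same gate as the snippet above: only a confident rule result skips embeddings.
(string Intent, double Confidence, string Path) Classify(string input)
{
    var rules = ClassifyWithRules(input);
    if (rules.Intent != "Unknown" && rules.Confidence >= 0.80)
        return (rules.Intent, rules.Confidence, "RulesOnly");

    // Weak or no rule match: embedding disambiguation would run here (not shown).
    return (rules.Intent, rules.Confidence, "NeedsDisambiguation");
}

Console.WriteLine(Classify("Redis is down, error rate is spiking").Path); // RulesOnly
```

Because each rule is a literal substring check, a misrouted message can be explained by pointing at the exact phrase that fired.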

Step 5: Embeddings only for disambiguation

When rules are uncertain, you allow embeddings to break ties. The approach is deliberately simple: compare the query embedding to a small set of intent prototypes.

```csharp
private static readonly (Intent Intent, string Prototype)[] Prototypes =
[
    (Intent.WorldTime, "user asks for current time in a specific city"),
    (Intent.RunbookSearch, "user describes an incident and wants a runbook or troubleshooting steps"),
    (Intent.GeneralOpsAdvice, "user asks general production operations advice for AI systems")
];
```

The classifier enforces two safety controls:

- If the top score is below the threshold, embeddings do not override the rule result.

- If the top two scores are too close, the request is treated as ambiguous and routed to a safe non-action path.

```csharp
// topConf: best prototype similarity; margin: gap between the top two similarities.
if (topConf < _cfg.IntentRouting.EmbeddingConfidenceThreshold)
    return (rules.Intent, rules.Confidence, RoutingPath.RulesPlusEmbeddings);

if (margin < _cfg.IntentRouting.AmbiguityMargin)
    return (Intent.GeneralOpsAdvice, 0.50, RoutingPath.RulesPlusEmbeddings);
```

This is the core production pattern. Embeddings can help, but they are never trusted blindly.
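Both controls can be exercised end to end with toy vectors. In this sketch the three prototypes are hypothetical orthogonal unit vectors standing in for real prototype embeddings (the article embeds the prototype sentences with the local embedding model), and the defaults 0.45 and 0.05 mirror IntentRoutingConfig.

```csharp
using System;
using System.Linq;

// Hypothetical toy embeddings: one orthogonal unit vector per prototype.
var prototypes = new (string Intent, double[] Vec)[]
{
    ("WorldTime",        new[] { 1.0, 0.0, 0.0 }),
    ("RunbookSearch",    new[] { 0.0, 1.0, 0.0 }),
    ("GeneralOpsAdvice", new[] { 0.0, 0.0, 1.0 })
};

double Cosine(double[] x, double[] y)
{
    double dot = 0, nx = 0, ny = 0;
    for (int i = 0; i < x.Length; i++)
    {
        dot += x[i] * y[i];
        nx += x[i] * x[i];
        ny += y[i] * y[i];
    }
    return nx == 0 || ny == 0 ? 0 : dot / (Math.Sqrt(nx) * Math.Sqrt(ny));
}

(string Intent, double Confidence, string Path) Disambiguate(
    double[] query,
    (string Intent, double Confidence) rules,
    double threshold = 0.45,
    double ambiguityMargin = 0.05)
{
    var ranked = prototypes
        .Select(p => (p.Intent, Score: Cosine(query, p.Vec)))
        .OrderByDescending(r => r.Score)
        .ToList();
    double topConf = ranked[0].Score;
    double margin = topConf - ranked[1].Score;

    // Control 1: a weak best match never overrides the rule result.
    if (topConf < threshold) return (rules.Intent, rules.Confidence, "RulesPlusEmbeddings");
    // Control 2: a near-tie between the top two is ambiguous, so take the non-action path.
    if (margin < ambiguityMargin) return ("GeneralOpsAdvice", 0.50, "RulesPlusEmbeddings");
    return (ranked[0].Intent, topConf, "RulesPlusEmbeddings");
}

Console.WriteLine(Disambiguate(new[] { 0.7, 0.7, 0.1 }, ("RunbookSearch", 0.60)).Intent); // GeneralOpsAdvice
```

A query that is equally close to WorldTime and RunbookSearch lands on the non-action GeneralOpsAdvice path instead of guessing a tool.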

Step 6: Tool execution is allowlisted and validated

Tools are executed through ToolRegistry. This is where you enforce deterministic guardrails for tool usage.

Tool execution controls:

- Tool allowlist

- Argument validation per tool

- Strict timeout

- Safe error messages
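The four controls can be combined in one dispatch method. This is a sketch, not the repository's ToolRegistry: the required-argument table, the "query" argument for Runbooks.Search, the handler delegate shape, and the 200 ms timeout are all assumptions, and the table's keys double as the allowlist.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;

// Tool names come from the article; the table's keys double as the allowlist.
var requiredArgs = new Dictionary<string, string[]>(StringComparer.OrdinalIgnoreCase)
{
    ["WorldTime.GetCityTime"] = new[] { "city" },
    ["Runbooks.Search"] = new[] { "query" } // "query" is a hypothetical argument name
};
const int ToolTimeoutMs = 200; // hypothetical value

async Task<(bool Ok, string Message)> ExecuteAsync(
    string toolName,
    IReadOnlyDictionary<string, object?>? toolArgs,
    Func<IReadOnlyDictionary<string, object?>, CancellationToken, Task<string>> handler,
    CancellationToken ct = default)
{
    // 1. Allowlist: unknown tool names never execute.
    if (!requiredArgs.TryGetValue(toolName, out var required))
        return (false, "Tool not allowed.");

    // 2. Argument validation: every required argument must be present and non-blank.
    var safeArgs = toolArgs ?? new Dictionary<string, object?>();
    foreach (var name in required)
    {
        if (!safeArgs.TryGetValue(name, out var value) || value is null ||
            (value is string s && string.IsNullOrWhiteSpace(s)))
            return (false, $"Missing required argument: {name}");
    }

    // 3. Timeout: cancel on caller cancellation or after ToolTimeoutMs, whichever comes first.
    using var timeout = new CancellationTokenSource(TimeSpan.FromMilliseconds(ToolTimeoutMs));
    using var linked = CancellationTokenSource.CreateLinkedTokenSource(ct, timeout.Token);
    try
    {
        return (true, await handler(safeArgs, linked.Token));
    }
    catch (OperationCanceledException) when (timeout.IsCancellationRequested && !ct.IsCancellationRequested)
    {
        return (false, "The tool timed out. Please try again.");
    }
    catch (Exception ex) when (ex is not OperationCanceledException)
    {
        // 4. Safe error message: exception details are not echoed to the user.
        return (false, "The tool failed. Please try again.");
    }
}

var slow = await ExecuteAsync(
    "Runbooks.Search",
    new Dictionary<string, object?> { ["query"] = "redis down" },
    async (_, token) => { await Task.Delay(5000, token); return "unreachable"; });
Console.WriteLine(slow.Message); // The tool timed out. Please try again.
```

Caller cancellation is deliberately not swallowed: only the registry's own timeout is converted into a user-facing message.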

```csharp
private static readonly HashSet<string> AllowedTools = new(StringComparer.OrdinalIgnoreCase)
{
    "WorldTime.GetCityTime",
    "Runbooks.Search"
};

if (!AllowedTools.Contains(plan.ToolName))
    return ToolExecutionResult.Fail("Tool not allowed.");
```

Argument validation prevents tool misuse from malformed extraction:

```csharp
if (args is null || !args.TryGetValue("city", out var cityObj))
    return ToolExecutionResult.Fail("Missing required argument: city");
```

Timeouts protect your system when models are cold or embeddings are slow:

```csharp
// Cancels on caller cancellation or after ToolTimeoutMs, whichever comes first;
// linked.Token is the token passed to the tool call.
using var timeout = new CancellationTokenSource(TimeSpan.FromMilliseconds(_cfg.ToolTimeoutMs));
using var linked = CancellationTokenSource.CreateLinkedTokenSource(ct, timeout.Token);
```

Step 7: Grounded answers prevent hallucination

For tool-backed intents like runbook search, the final response is composed using only tool output.

```csharp
var system = """
    You are a guardrailed assistant for engineering teams.
    Rules:
    - Answer using ONLY the TOOL_OUTPUT below.
    - If TOOL_OUTPUT does not contain enough info, say: "I don't know."
    - Keep it concise (max 6 sentences).
    """;
```

This is one of the highest-leverage reliability techniques. If retrieval fails, you get a controlled failure mode instead of a confident fiction.
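Composition can also short-circuit before the model is called at all. In this sketch the chat call is passed in as a delegate because the article does not show the OllamaChatClient API; askModel and the QUESTION/TOOL_OUTPUT message layout are hypothetical.

```csharp
using System;

const string SystemPrompt = """
    You are a guardrailed assistant for engineering teams.
    Rules:
    - Answer using ONLY the TOOL_OUTPUT below.
    - If TOOL_OUTPUT does not contain enough info, say: "I don't know."
    - Keep it concise (max 6 sentences).
    """;

// askModel(systemMessage, userMessage) is a hypothetical stand-in for the real chat client call.
string Compose(string question, string toolOutput, Func<string, string, string> askModel)
{
    // Deterministic short-circuit: nothing retrieved means nothing to ground on, so skip the model.
    if (string.IsNullOrWhiteSpace(toolOutput)) return "I don't know.";

    // The model gets the rules as the system message and the question plus retrieved text as the user message.
    var userMessage = $"QUESTION:\n{question}\n\nTOOL_OUTPUT:\n{toolOutput}";
    return askModel(SystemPrompt, userMessage);
}

Console.WriteLine(Compose("Redis is down", "", (_, _) => "not called")); // I don't know.
```

The empty-output check makes the "I don't know." path deterministic rather than relying on the model to follow its instructions.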

World time is even simpler. The tool returns the final string and the router prints it. No LLM is needed for the final answer.

Runbook Search Stays Deterministic

The semantic index is built once at startup, and query-time ranking is deterministic. Embeddings are used only to represent text; ranking is pure cosine similarity.

```csharp
var results = _index
    .Select(e => (e.Doc, Score: CosineSimilarity(e.Vec, q)))
    .OrderByDescending(x => x.Score)
    .Take(topK)
    .ToList();
```

This keeps retrieval predictable and inspectable. The model does not "decide" which doc is correct. The math does.
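A self-contained version of the ranking step, with a hypothetical three-document index and 2-dimensional toy vectors in place of real runbook embeddings:

```csharp
using System;
using System.Linq;

// Hypothetical index; real vectors would come from embedding each runbook once at startup.
var index = new (string Doc, float[] Vec)[]
{
    ("Redis outage runbook",       new[] { 0.9f, 0.1f }),
    ("High error rate runbook",    new[] { 0.6f, 0.8f }),
    ("Certificate expiry runbook", new[] { 0.0f, 1.0f })
};

static double CosineSimilarity(float[] a, float[] b)
{
    if (a.Length != b.Length) throw new ArgumentException("Vector dimensions differ.");
    double dot = 0, na = 0, nb = 0;
    for (int i = 0; i < a.Length; i++)
    {
        dot += a[i] * b[i];
        na += a[i] * a[i];
        nb += b[i] * b[i];
    }
    return na == 0 || nb == 0 ? 0 : dot / (Math.Sqrt(na) * Math.Sqrt(nb));
}

// Same pipeline as the snippet above: score every document, sort descending, keep the top K.
(string Doc, double Score)[] Search(float[] q, int topK) => index
    .Select(e => (e.Doc, Score: CosineSimilarity(e.Vec, q)))
    .OrderByDescending(x => x.Score)
    .Take(topK)
    .ToArray();

// Prints the two best matches; "Redis outage runbook" ranks first for this query vector.
foreach (var (doc, score) in Search(new[] { 1.0f, 0.0f }, topK: 2))
    Console.WriteLine($"{score:F3}  {doc}");
```

Given the same vectors, the ranking is fully reproducible: nothing in this step samples or asks a model to choose.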

Example Interaction Behavior

Input: "What time is it in London"

- Rule matches WorldTime

- Extract city

- Call WorldTime.GetCityTime

- Return the deterministic tool result

Input: "Redis is down, error rate is spiking"

- Rule matches RunbookSearch

- Call Runbooks.Search

- Compose a grounded response from runbook excerpts

Input: "What time is it in London, also Redis is down"

- Multi-intent detected

- No tools

- Clarifying question: choose time or incident search

That last case is the difference between a demo and a system.

Why This Architecture Works

This project is small, but the structure maps directly to production constraints:

- Guardrails before models

- Rules-first routing

- Embeddings only when needed

- One intent per turn

- Tool allowlists, arg validation, and timeouts

- Grounded answers for tool-backed paths

The LLM remains valuable, but it never becomes the control plane.

Potential Enhancements

This system can be extended incrementally:

- Move the hard-coded rule keywords into a small, curated keyword dictionary per intent

- Add structured logging for intent, confidence, and tool latency

- Persist the runbook index and document vectors to avoid startup embedding cost

- Add an explicit "clarify" intent instead of overloading GeneralOpsAdvice as a fallback

- Add an evaluation harness like Issue #13 to gate routing regressions and tool misuse

Final Notes

Intent routing is not a UX feature. It is a reliability feature. The most production-aligned AI systems treat the model as a component, not as the orchestrator. If you can make routing deterministic, tool execution constrained, and answers grounded, you can ship AI features without gambling your system behavior.

Explore the source code at the GitHub repository.

See you in the next issue.

Stay curious.
